Currently building Rekall.

I build, ride, and rave. If I'm not building or raving, you'll likely catch me shredding the gnar on my mountain bike or skis.

Idea Entity

Software Engineer

Mar 2023 - Jun 2024

Jasmine Energy

Principal Engineer & Co-Founder

Jul 2022 - Feb 2023

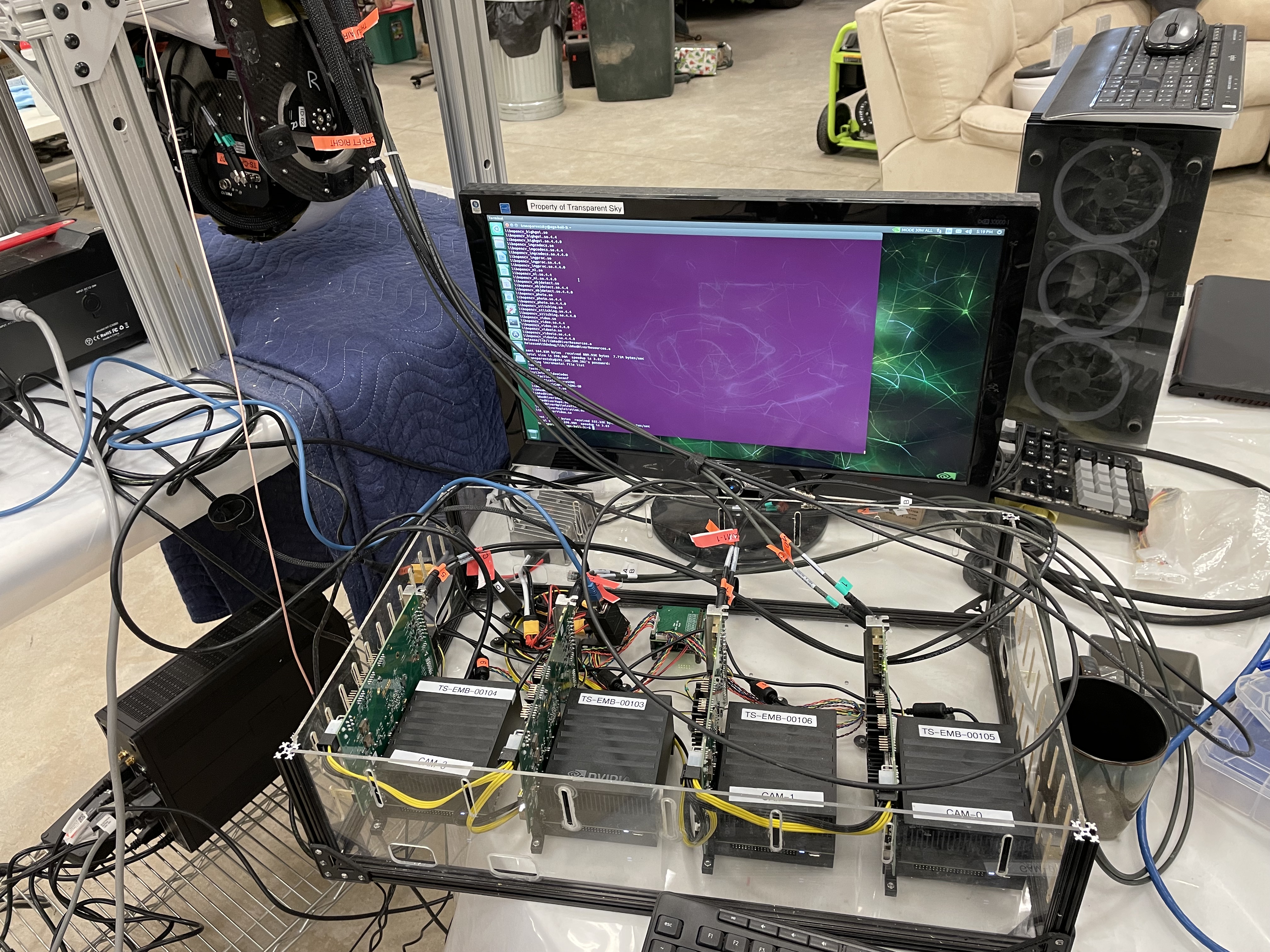

Transparent Sky

Machine Learning Lead / Research & Development Engineer

Aug 2020 - Jul 2022

Terbine

Front-End Web Developer

Oct 2017 - Mar 2020

Atlas Group LC

Full Stack Developer

Mar 2016 - Jul 2017

White Rabbit

Quality Assurance Engineer

Aug 2015 - Feb 2016

Hyman In Vivo Electrophysiology Lab

Undergraduate Researcher

Sep 2019 - Nov 2019

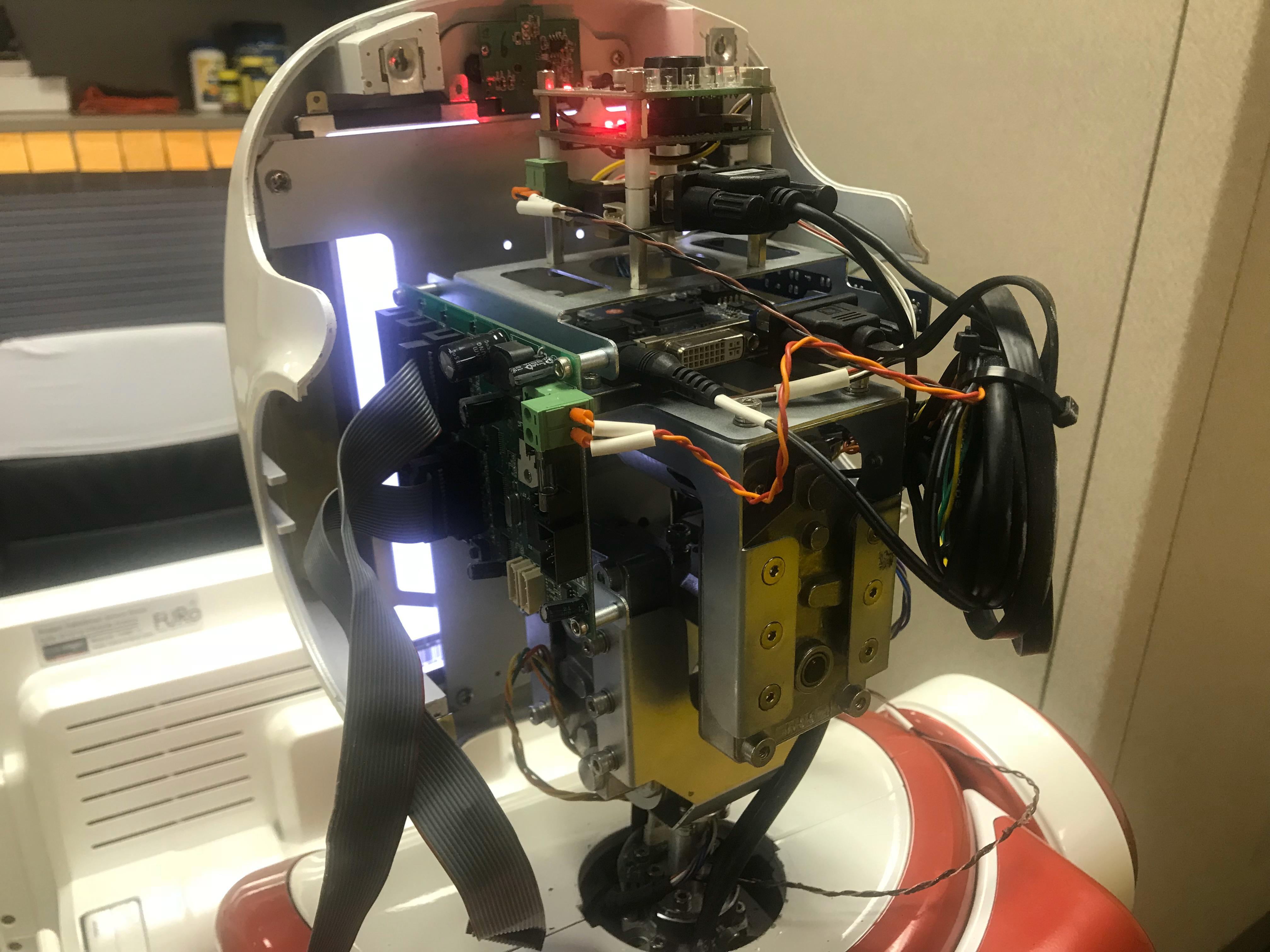

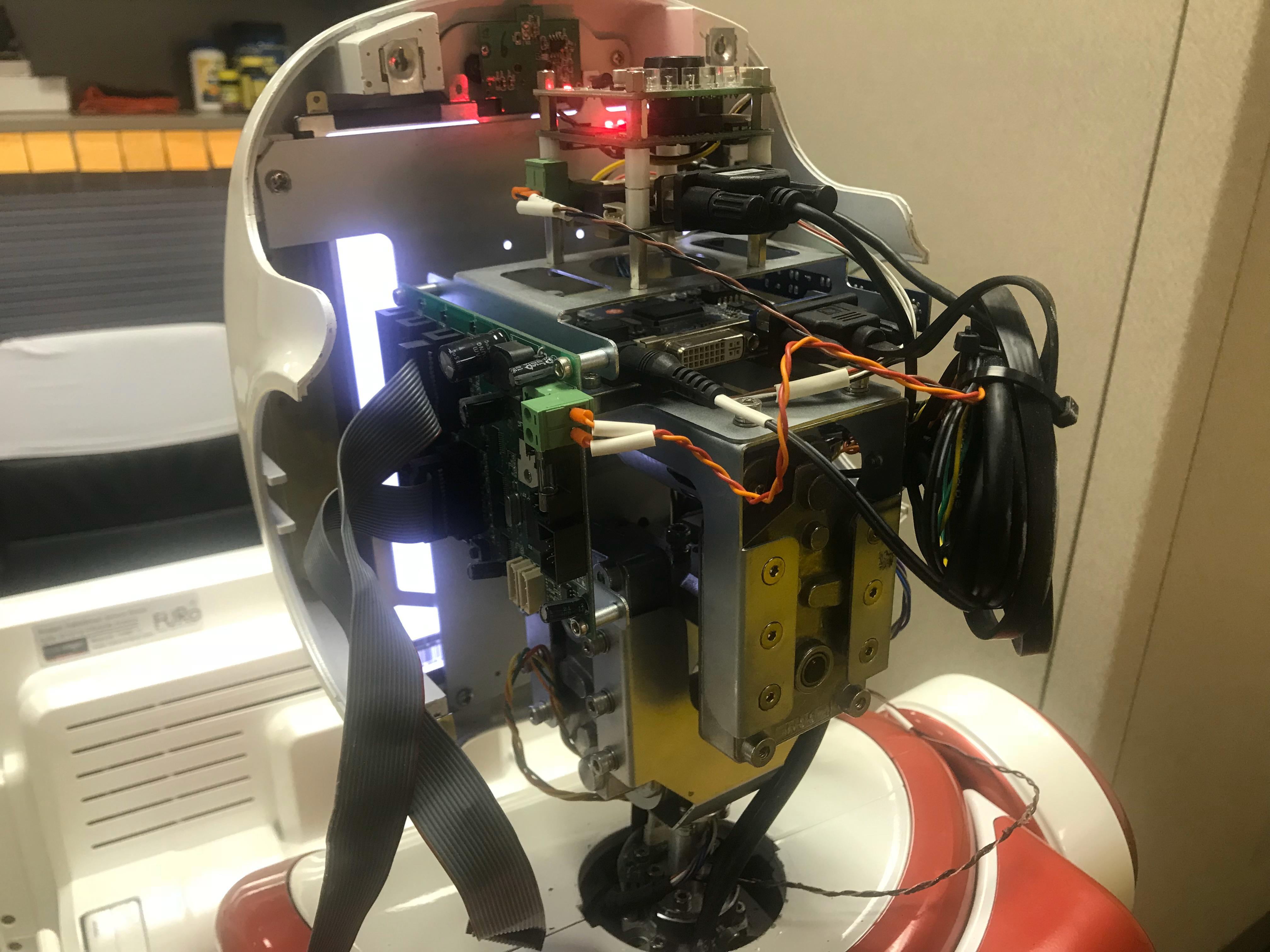

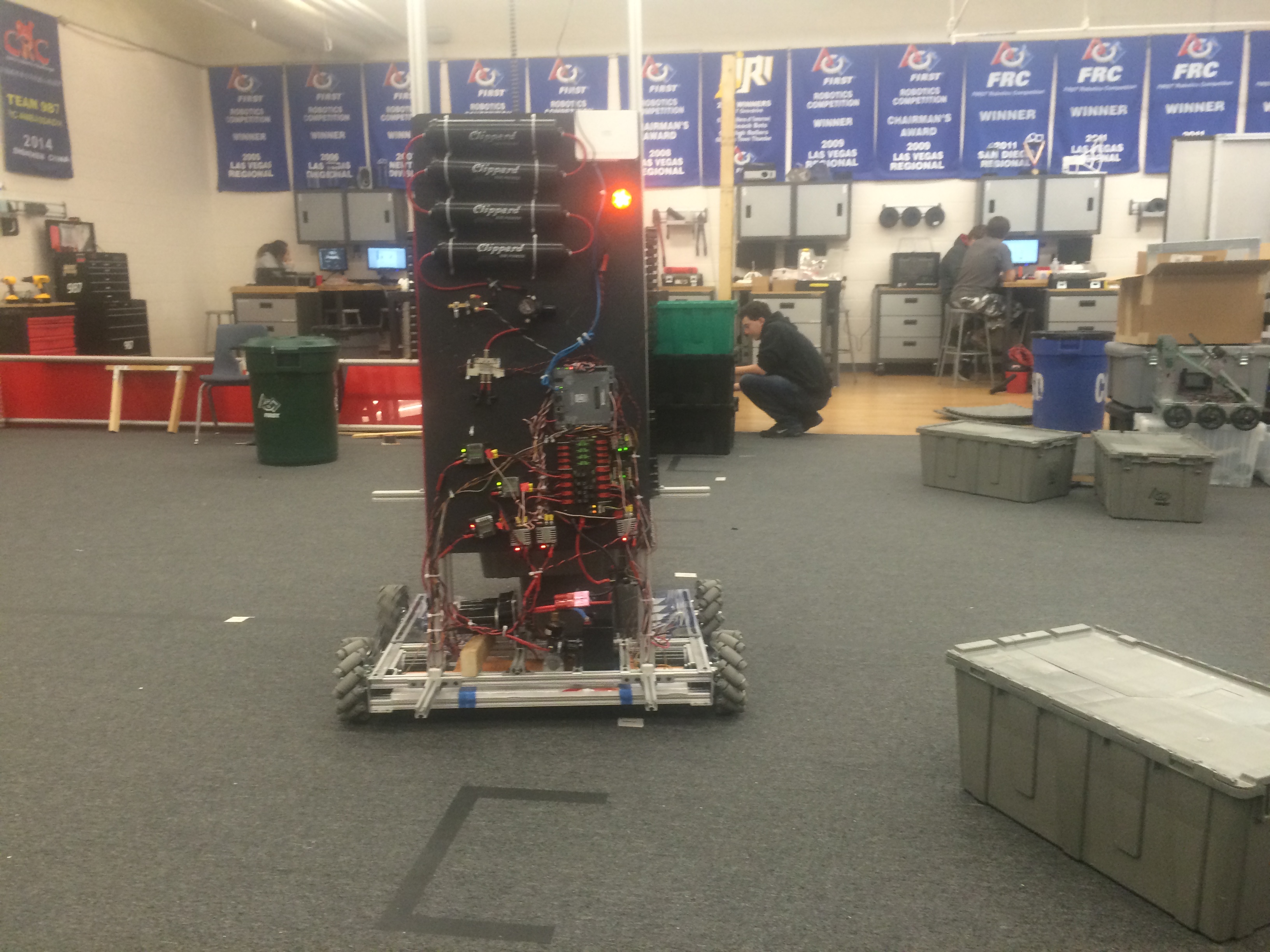

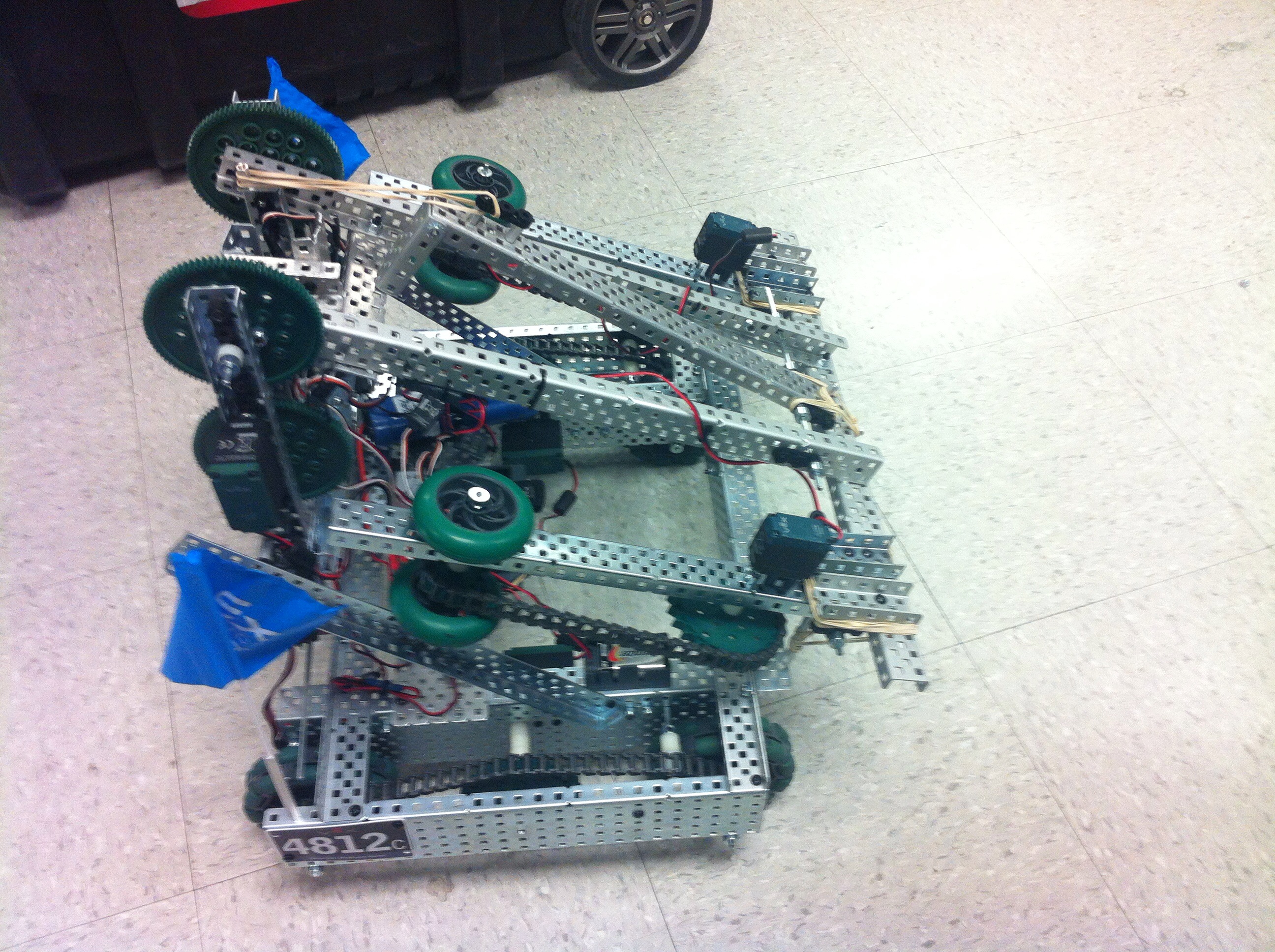

Collaborative Human-Robot Interaction Lab

Robotics REU Site Participant

Jun 2018 - Aug 2018

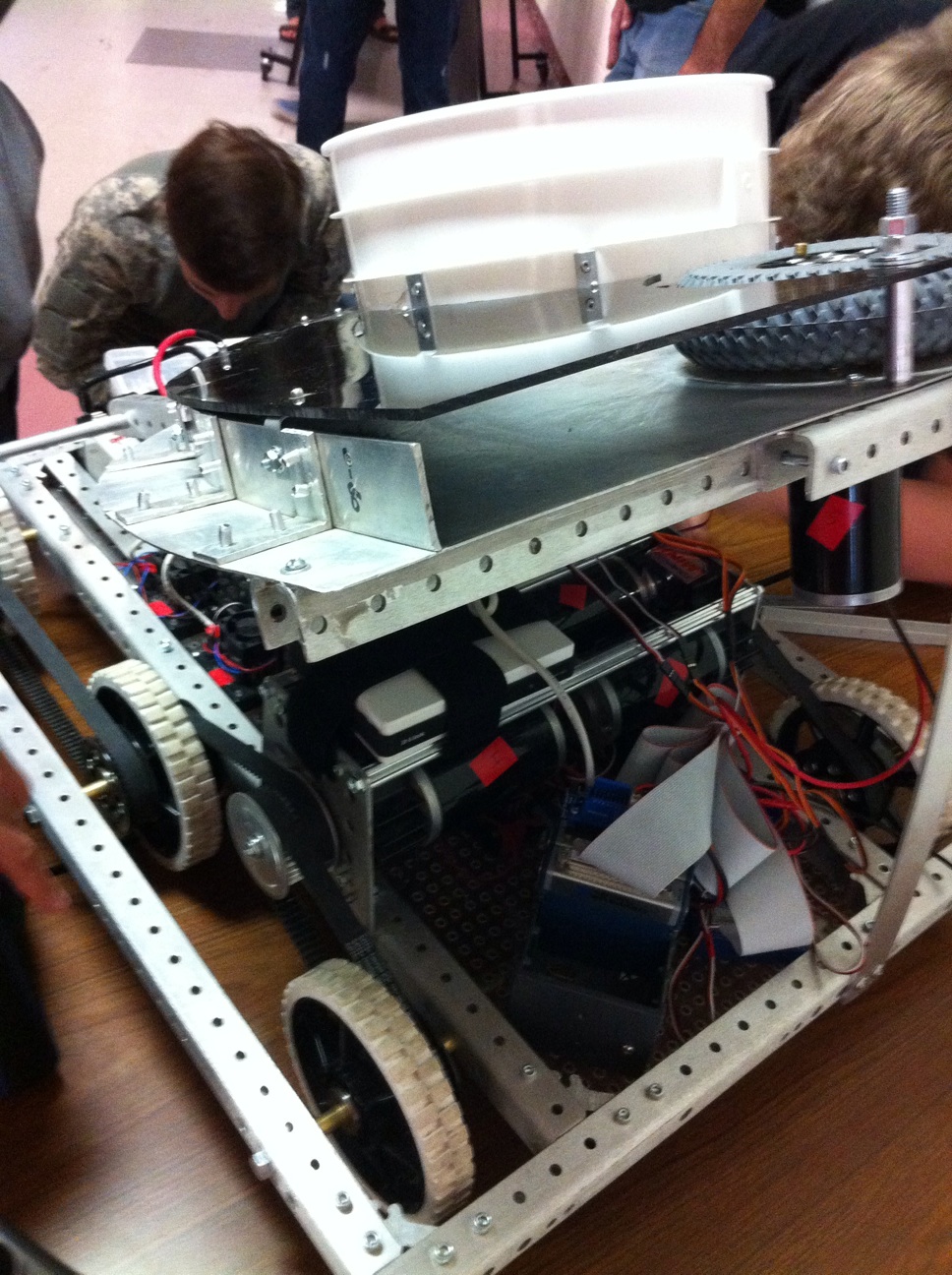

Drones & Autonomous Systems Laboratory

Undergraduate Researcher

Dec 2016 - Feb 2018

dApp V1

dApp V2

dApp Final

Random

Project Overview

As a Co-Founder and Senior Software Engineer at Jasmine Energy, I led the development of a blockchain-based platform for trading Energy Attribute Certificates (EACs), modernizing processes in a $10B market previously reliant on manual operations.

Platform Development

I spearheaded the development of our decentralized application (dApp) on the Ethereum blockchain:

- Architected and implemented the core smart contract infrastructure for tokenizing Renewable Energy Certificates (RECs).

- Developed a permissionless trading system enabling instant settlement of EAC transactions, replacing weeks-long manual processes.

- Created an automated regulatory compliance system that handles required filings and auditing when tokenized EACs are retired.

- Implemented real-time price discovery mechanisms to enhance market transparency and efficiency.

Technical Stack

Designed and implemented a comprehensive full-stack architecture:

- Frontend Development:

- - Built with TypeScript and Node.js for type-safe, maintainable code

- - Integrated Web3.js for blockchain interaction and smart contract integration

- - Implemented comprehensive wallet support including MetaMask, Coinbase Wallet, and WalletConnect

- - Developed real-time transaction monitoring and state management systems

- Backend Infrastructure:

- - Created Python-based worker services for automated REC registration and processing

- - Developed event listeners for blockchain state changes and automated responses

- - Implemented secure API endpoints for interaction with various REC registries

- - Built automated systems for regulatory compliance and reporting

- Blockchain Integration:

- - Developed and deployed Ethereum smart contracts for EAC tokenization

- - Created custom libraries for handling complex blockchain transactions

- - Implemented gas optimization strategies for cost-effective contract deployment

- - Built robust error handling and transaction recovery systems

Technical Achievements

Led the technical implementation of several key platform features:

- Designed and implemented the blockchain bridging system for converting traditional RECs into tokenized EACs.

- Developed smart contract architecture ensuring proper tracking and prevention of double-counting issues.

- Implemented a comprehensive audit trail system for tracking energy consumption and certificate ownership.
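The core invariant behind retirement tracking is that a tokenized certificate can be retired exactly once, and every retirement leaves an audit record. The minimal in-memory sketch below illustrates that invariant in C++ (the language of the code elsewhere on this page). It is not the Jasmine smart contract; `CertificateLedger`, `mint`, `retire`, and the `Retirement` record are hypothetical names chosen for illustration.

```cpp
#include <cstdint>
#include <stdexcept>
#include <string>
#include <unordered_map>
#include <vector>

// Hypothetical audit-trail entry written when a certificate is retired.
struct Retirement {
    std::uint64_t tokenId;
    std::string beneficiary;
    double mwh;
};

// Hypothetical ledger: each tokenized certificate can be retired exactly once.
class CertificateLedger {
public:
    // Register a tokenized certificate representing `mwh` megawatt-hours.
    void mint(std::uint64_t tokenId, double mwh) {
        if (tokens_.count(tokenId) != 0)
            throw std::invalid_argument("token already minted");
        tokens_[tokenId] = Token{mwh, false};
    }

    // Retire a certificate for a beneficiary; a second retirement is rejected.
    void retire(std::uint64_t tokenId, const std::string &beneficiary) {
        auto it = tokens_.find(tokenId);
        if (it == tokens_.end())
            throw std::invalid_argument("unknown token");
        if (it->second.retired)
            throw std::logic_error("token already retired");  // prevents double counting
        it->second.retired = true;
        auditTrail_.push_back({tokenId, beneficiary, it->second.mwh});
    }

    bool isRetired(std::uint64_t tokenId) const {
        auto it = tokens_.find(tokenId);
        return it != tokens_.end() && it->second.retired;
    }

    const std::vector<Retirement> &auditTrail() const { return auditTrail_; }

private:
    struct Token {
        double mwh;
        bool retired;
    };
    std::unordered_map<std::uint64_t, Token> tokens_;
    std::vector<Retirement> auditTrail_;
};
```

On a blockchain, the same rule would be enforced by contract state that every participant can verify, rather than by a process-local map.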

Business Impact

Our approach led to measurable improvements in the EAC market:

- Reduced transaction settlement time from days to seconds through blockchain implementation.

- Decreased market costs by eliminating middleman fees of up to 30%.

- Improved market efficiency by 25% through transparent pricing mechanisms.

- Reduced instances of double counting via on-chain token retirement tracking.

- Secured $2.1 million in seed funding after presenting our MVP at Y Combinator demo day.

Environmental Impact

The platform contributes to climate action by:

- Facilitating faster and more efficient financing of renewable energy projects through improved market liquidity.

- Creating transparent price discovery mechanisms that help properly value renewable energy investments.

- Enabling new DeFi instruments that incentivize additional investment in renewable energy infrastructure.

- Supporting the global transition to clean energy by making EAC trading more accessible and efficient.

Cool Stuff

Look in the "Random" section of the gallery above to see Jasmine Energy in Times Square!

Live3D / Textured Meshes

Machine Learning

3D Viewing Software

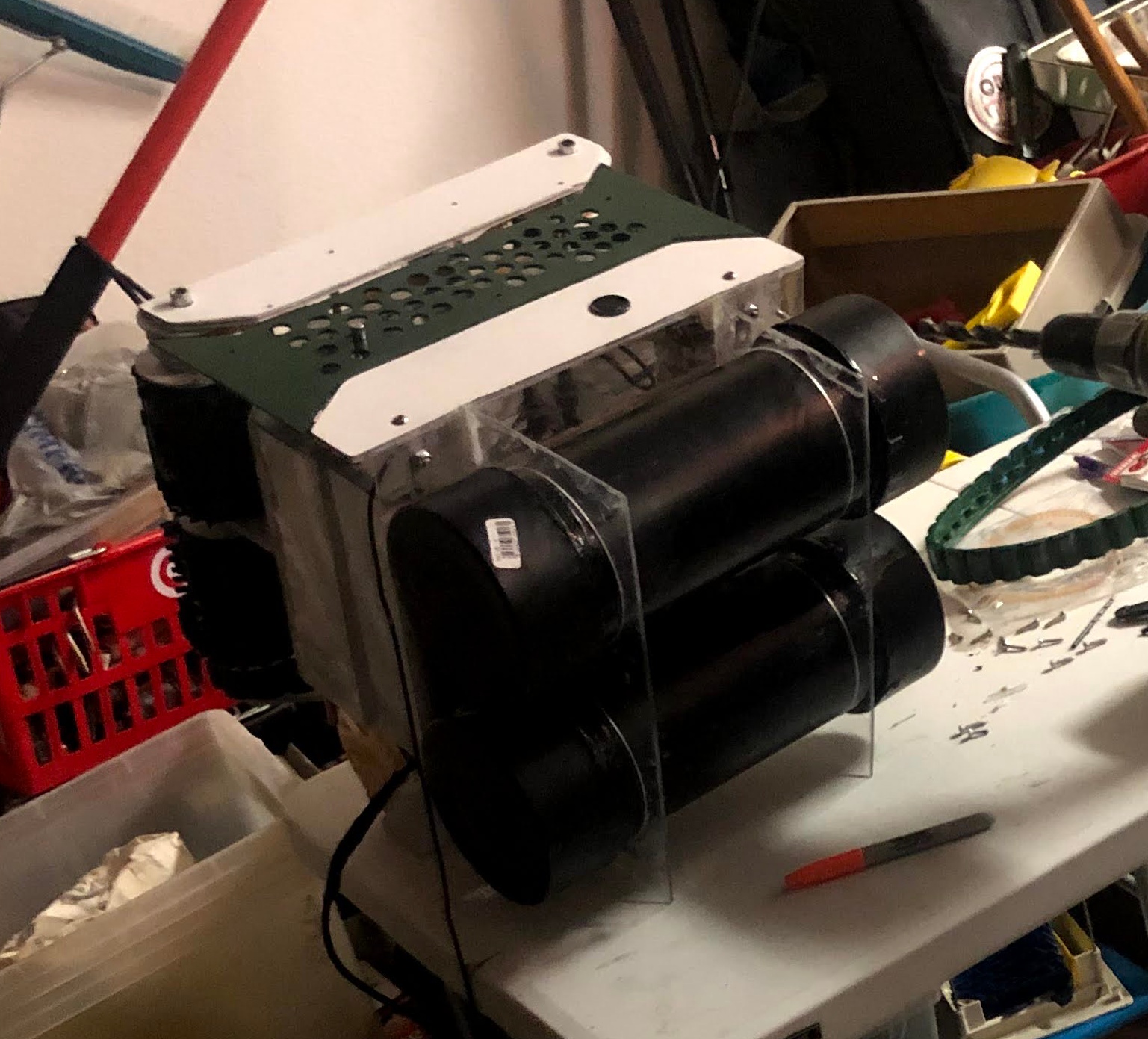

Hardware

Random

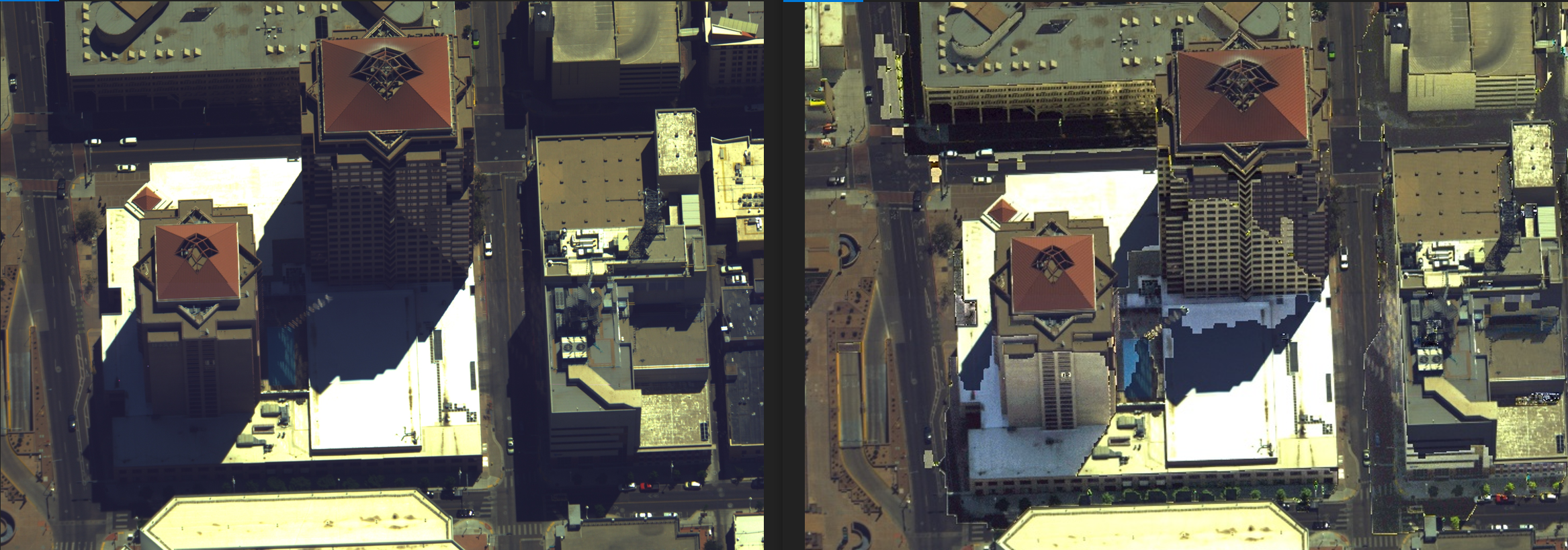

Project Overview

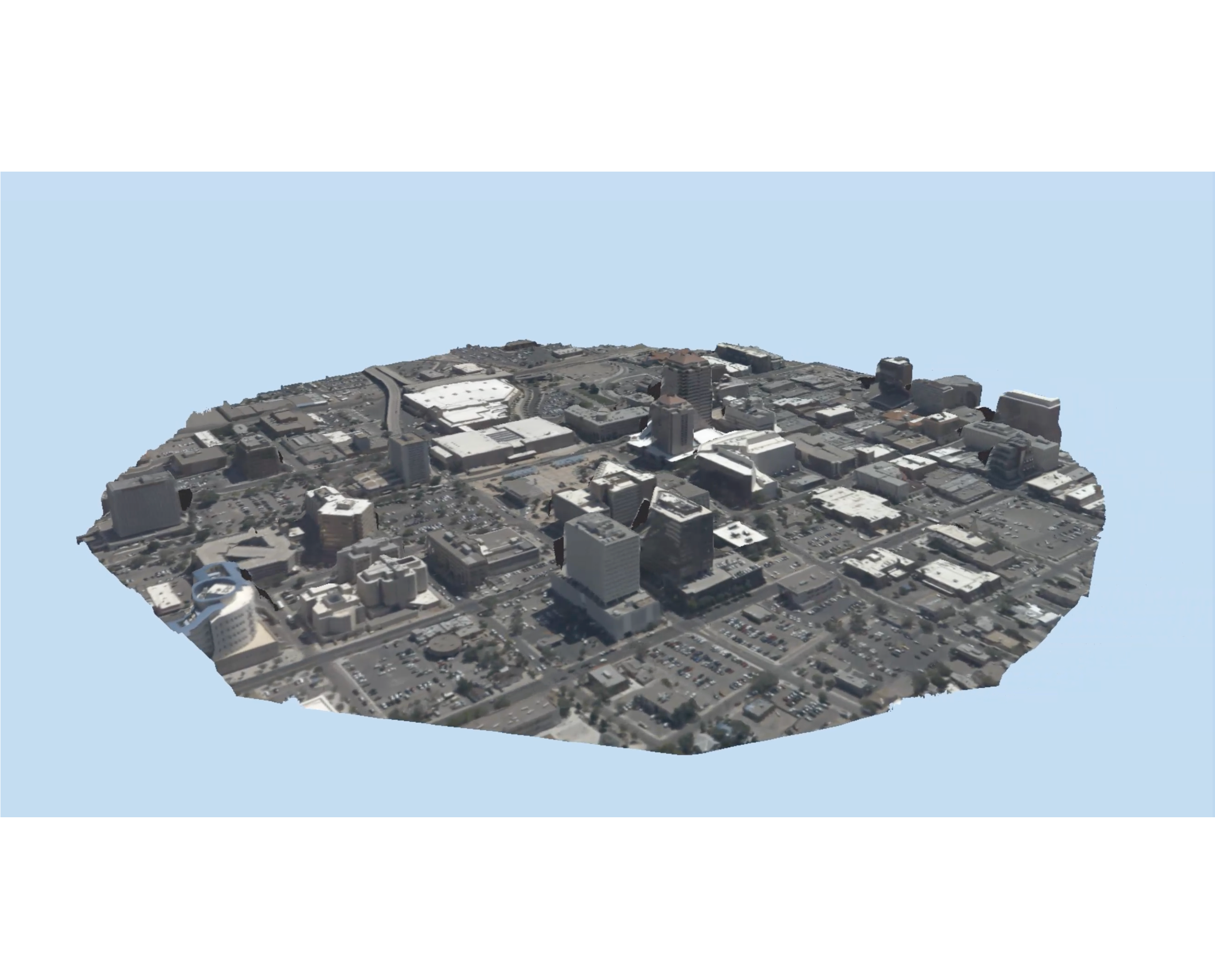

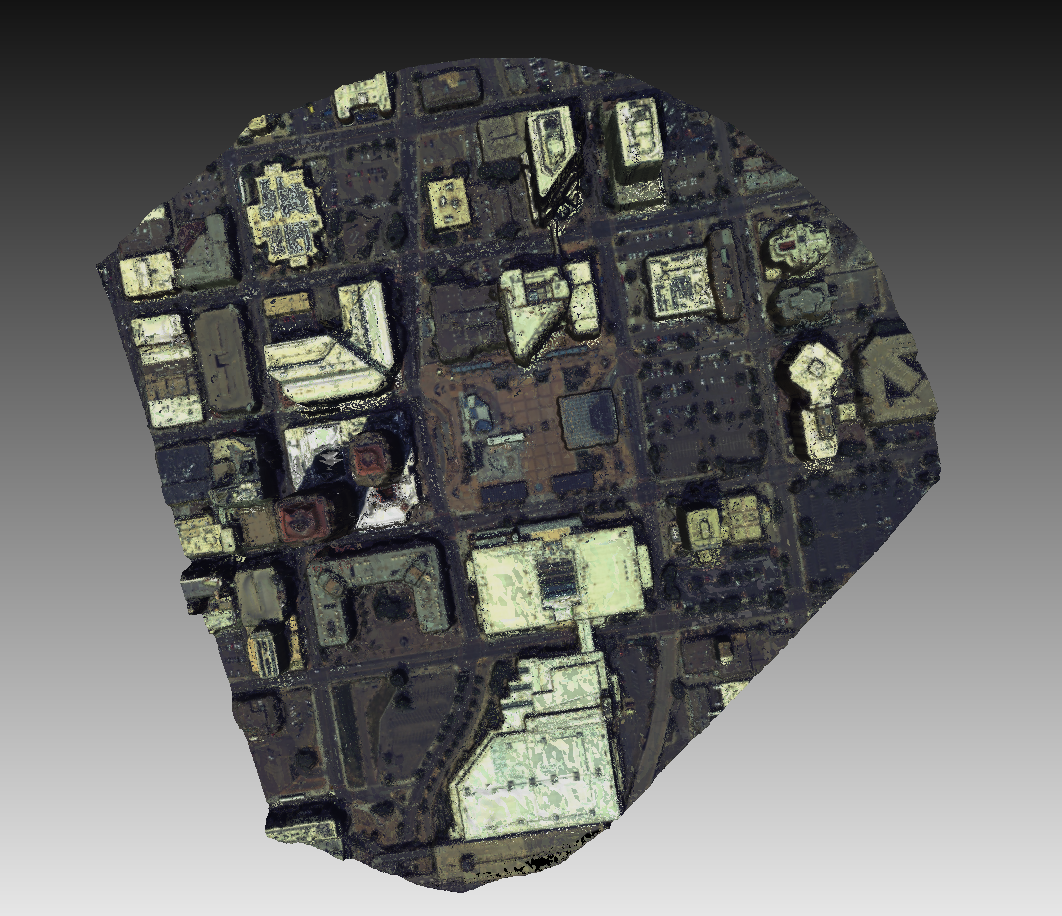

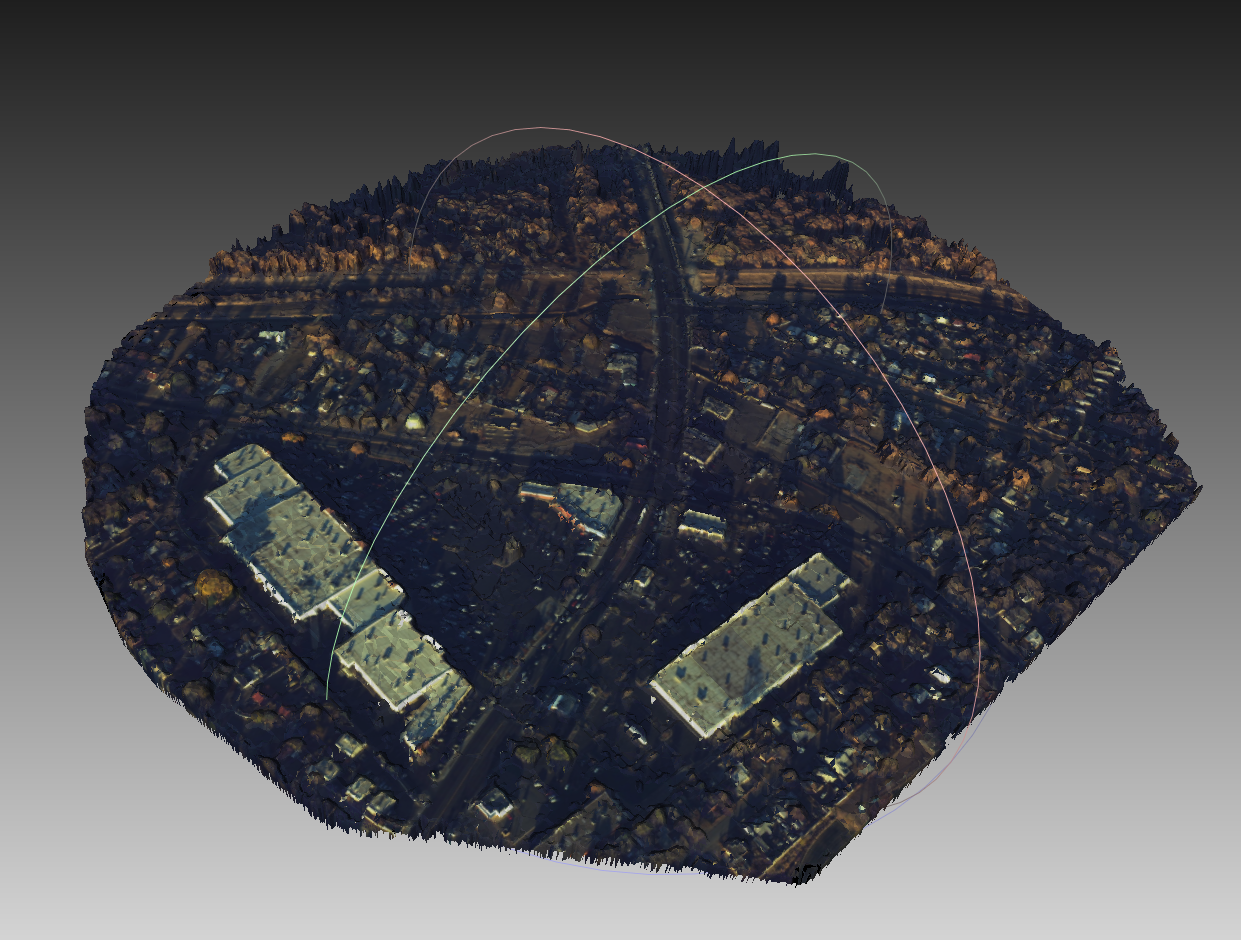

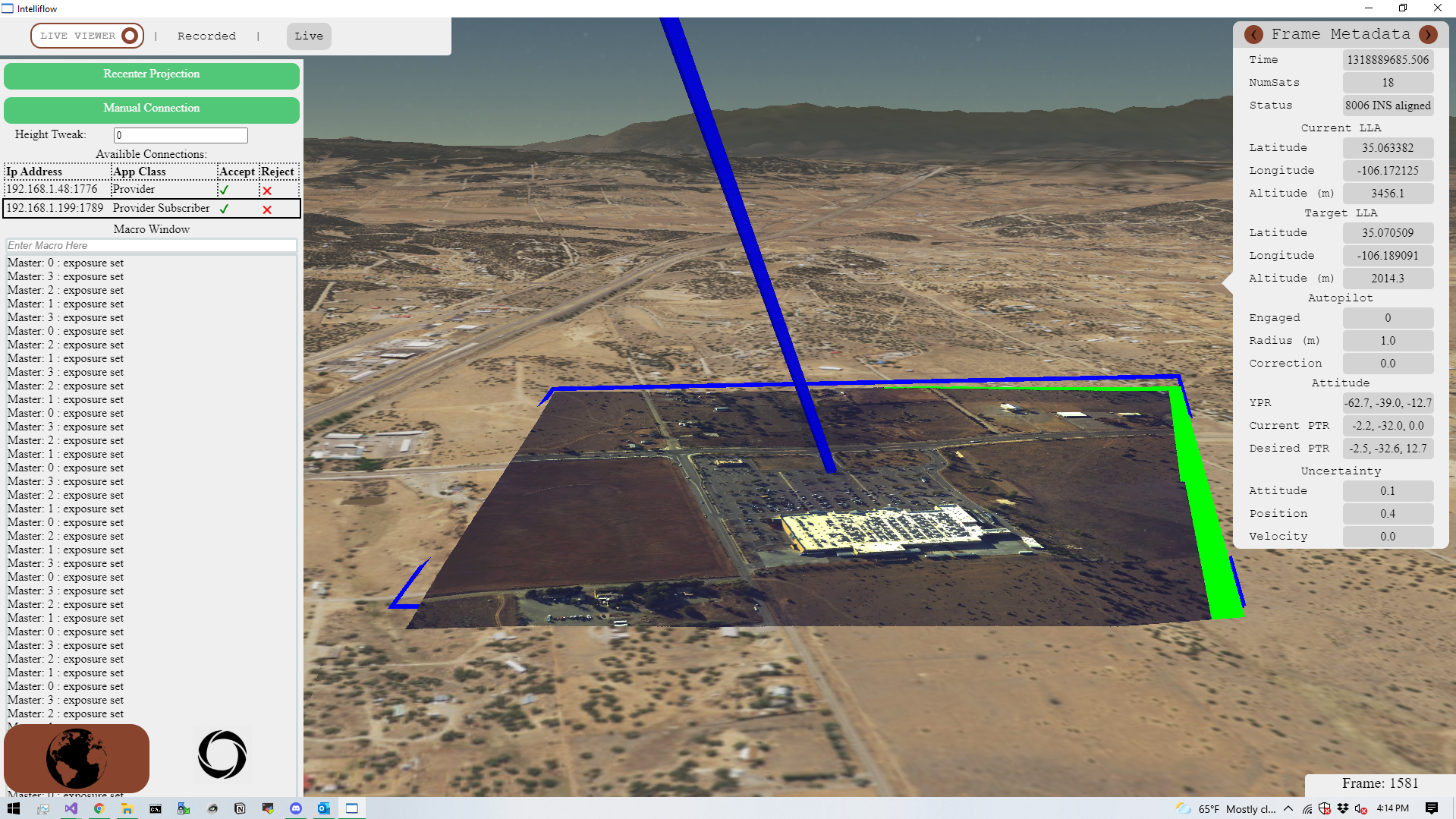

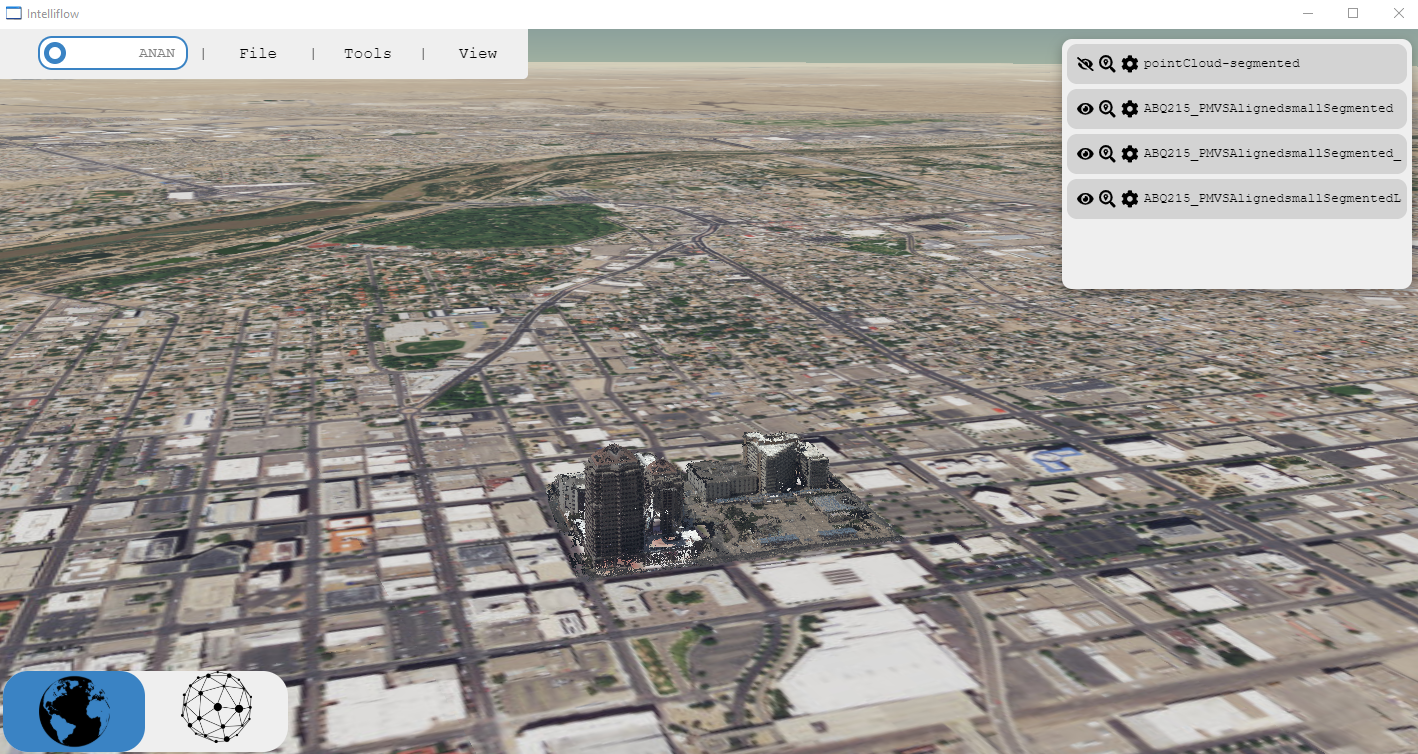

During my time at Transparent Sky, I worked on several projects focused on 3D data processing, machine learning, and visualization technologies.

Live3D

I led the development of our Live3D technology, which enhanced the way we process and visualize surveillance data:

- Engineered 3D reconstruction algorithms capable of producing high-quality meshes of entire cities in just 3 minutes.

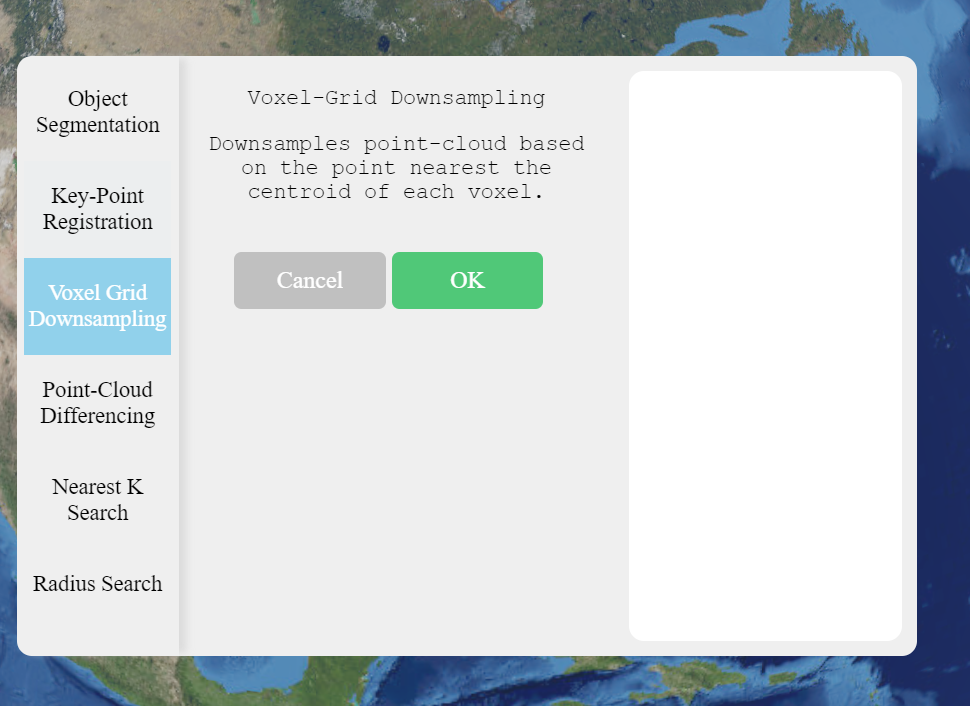

- Created a comprehensive in-house library of algorithms for point-cloud processing, mesh processing, and 3D geometry processing tasks.

- Implemented techniques including 3D temporal differencing, point-cloud segmentation, k-d tree and octree construction, level-of-detail (LOD) operations, surface reconstruction, texture mapping, and ray tracing.

- Used and extended industry-standard libraries such as the Point Cloud Library (PCL) and the CloudCompare core library (CCCoreLib) to enhance our processing capabilities.
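3D temporal differencing, in its simplest form, compares two captures of the same scene and keeps what changed. The sketch below illustrates the general idea with voxel hashing: both point clouds are quantized into a grid, and points from the newer capture that fall into voxels empty in the older one are reported. It is a simplified illustration, not the Live3D implementation; `temporalDifference`, `voxelKey`, and the voxel size are hypothetical, and it assumes both clouds are already registered in the same coordinate frame.

```cpp
#include <cmath>
#include <cstdint>
#include <unordered_set>
#include <vector>

struct Point3 {
    double x, y, z;
};

// Pack integer voxel indices into one 64-bit key (21 bits per axis, offset so
// that indices within roughly +/-1M voxels of the origin stay non-negative).
static std::uint64_t voxelKey(const Point3 &p, double voxelSize) {
    const std::int64_t offset = 1 << 20;
    auto idx = [&](double v) {
        std::int64_t i = static_cast<std::int64_t>(std::floor(v / voxelSize)) + offset;
        return static_cast<std::uint64_t>(i) & 0x1FFFFF;
    };
    return (idx(p.x) << 42) | (idx(p.y) << 21) | idx(p.z);
}

// Return the points of `current` whose voxel was unoccupied in `previous`.
std::vector<Point3> temporalDifference(const std::vector<Point3> &previous,
                                       const std::vector<Point3> &current,
                                       double voxelSize) {
    std::unordered_set<std::uint64_t> occupied;
    for (const auto &p : previous)
        occupied.insert(voxelKey(p, voxelSize));

    std::vector<Point3> changes;
    for (const auto &p : current)
        if (occupied.count(voxelKey(p, voxelSize)) == 0)
            changes.push_back(p);
    return changes;
}
```

This reports only newly occupied space; calling it with the arguments swapped yields geometry that disappeared. The voxel size trades sensitivity against robustness to capture noise.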

Machine Learning

Shadow Removal/Detection

I led the research team in developing machine learning approaches for shadow removal and detection in aerial imagery:

- Designed and implemented neural network architectures specifically tailored for identifying and eliminating shadows in various lighting conditions.

- Developed a novel algorithm that combined spectral and spatial information to accurately differentiate between shadows and actual dark objects in the scene.

- Created a large-scale dataset of aerial images with annotated shadows to train and validate our models.

Super-Resolution

I led the development of super-resolution techniques for both single-image and multi-image scenarios:

- Implemented and fine-tuned deep learning models such as SRCNN, ESRGAN, and DBPN for single-image super-resolution.

- Developed a novel multi-image super-resolution approach that leveraged temporal information from video sequences to achieve unprecedented levels of detail.

- Optimized our super-resolution pipeline for real-time processing, enabling on-the-fly enhancement of live video feeds.

3D Viewing Software

I took the lead in designing and developing a web-based UI for viewing and interacting with 3D mesh and point-cloud data:

- Utilized Chromium Embedded Framework (CEF) to create a high-performance, cross-platform application that combined the power of C++ with the flexibility of JavaScript.

- Implemented advanced rendering techniques to handle large-scale 3D datasets efficiently, including level-of-detail (LOD) management and out-of-core rendering.

- Designed an intuitive user interface that allowed for seamless navigation, measurement, and annotation of 3D models.

- Leveraged CesiumJS to incorporate accurate 3D representations of global terrain features:

- - Integrated high-resolution digital elevation models (DEMs) through CesiumJS to render realistic mountains, valleys, and other topographical features.

- - Utilized Cesium's globe rendering capabilities to seamlessly integrate our custom 3D models with worldwide terrain data.

- - Optimized the integration of large-scale terrain data with our own 3D models to maintain high performance across various zoom levels and viewpoints.

- Implemented the Live3D relighting system within the 3D viewer:

- - Developed a robust integration system to import our custom 3D meshes onto the Cesium terrain at precise geographic locations.

- - Created a real-time image projection pipeline that could handle multiple live feeds from planes, drones, and other aerial platforms.

- - Implemented advanced GPU-based rendering techniques to ensure smooth performance even with high-resolution imagery and complex 3D models.

- - Developed a user-friendly interface for controlling and adjusting the camera view, connecting to streaming sources, sending macros to aerial platforms, and viewing metadata about incoming frames, meshes, etc.
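Placing a mesh at a precise geographic location on the Cesium globe comes down to converting latitude, longitude, and height into Earth-centered, Earth-fixed (ECEF) coordinates on the WGS84 ellipsoid, the Cartesian frame CesiumJS renders in. The sketch below shows that standard conversion in C++ as a generic illustration rather than the viewer's integration code; `geodeticToEcef` is a hypothetical name.

```cpp
#include <cmath>

struct Ecef {
    double x, y, z;  // meters
};

// Convert WGS84 geodetic coordinates (degrees, meters above the ellipsoid) to ECEF.
Ecef geodeticToEcef(double latDeg, double lonDeg, double heightM) {
    const double a = 6378137.0;            // WGS84 semi-major axis (m)
    const double f = 1.0 / 298.257223563;  // WGS84 flattening
    const double e2 = f * (2.0 - f);       // first eccentricity squared
    const double kPi = 3.14159265358979323846;

    const double lat = latDeg * kPi / 180.0;
    const double lon = lonDeg * kPi / 180.0;
    const double sinLat = std::sin(lat);
    const double n = a / std::sqrt(1.0 - e2 * sinLat * sinLat);  // prime vertical radius

    return {(n + heightM) * std::cos(lat) * std::cos(lon),
            (n + heightM) * std::cos(lat) * std::sin(lon),
            (n * (1.0 - e2) + heightM) * sinLat};
}
```

In CesiumJS itself, `Cesium.Cartesian3.fromDegrees(longitude, latitude, height)` performs the same conversion; note that it takes longitude first.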

Project Overview

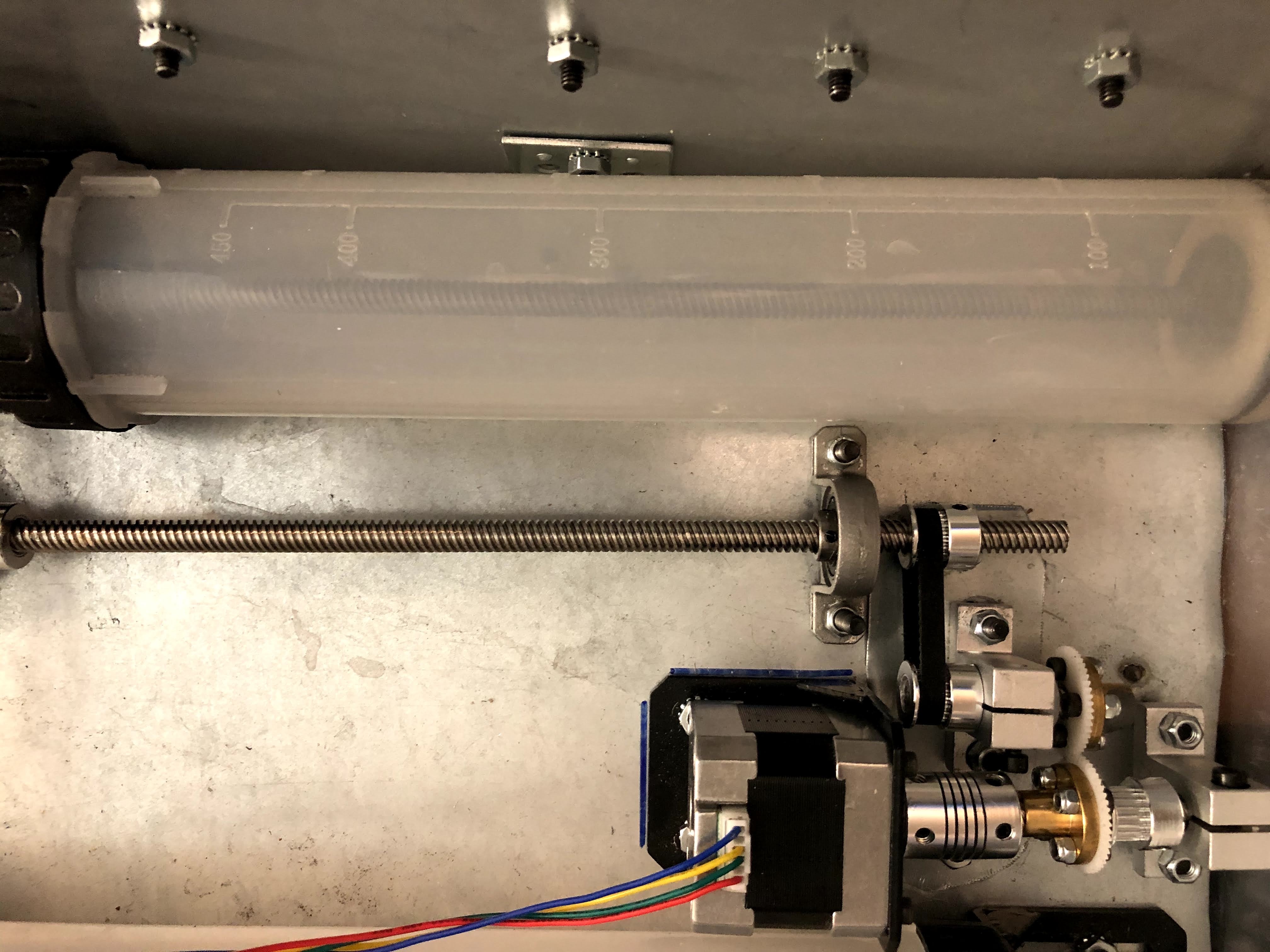

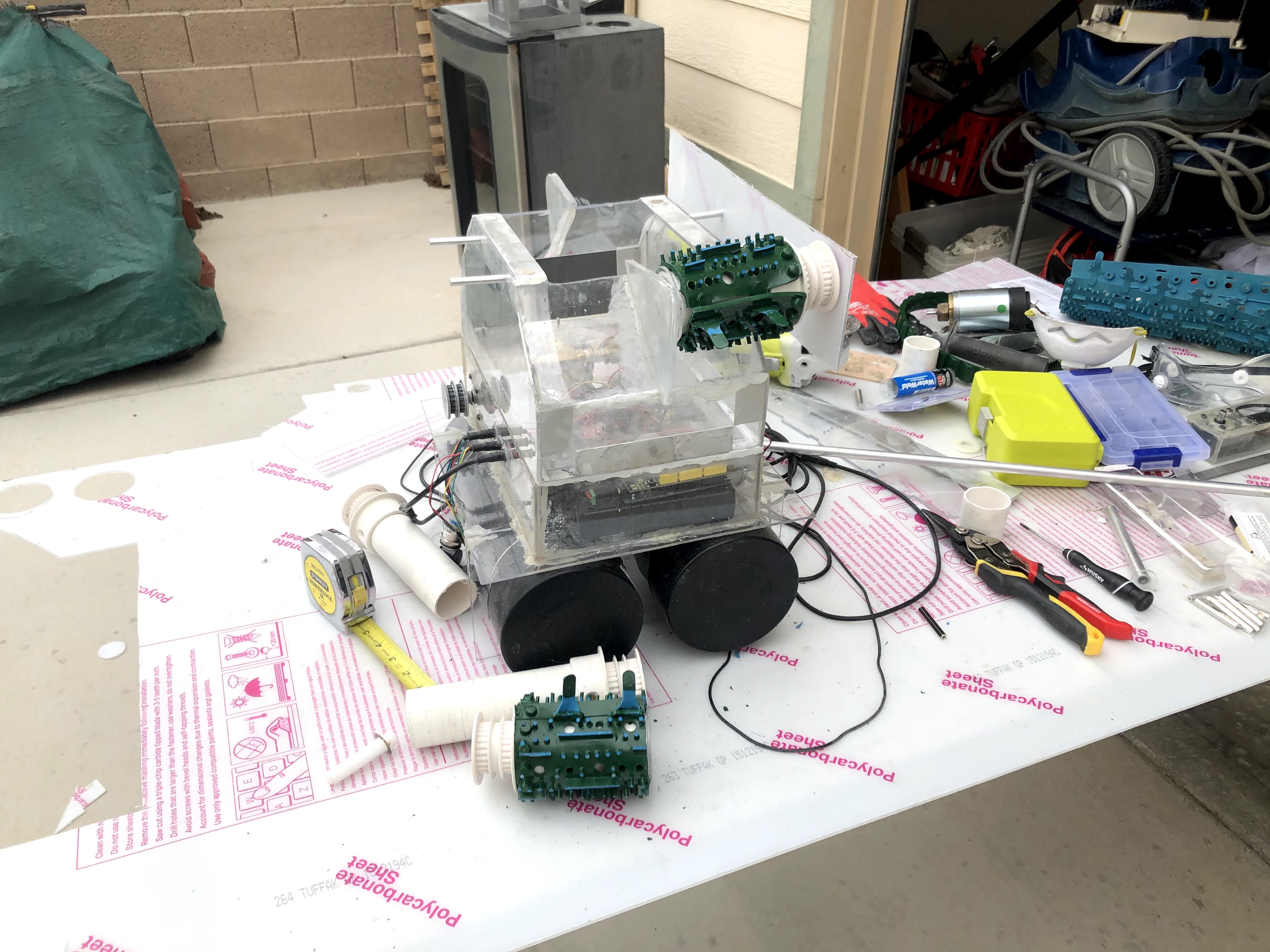

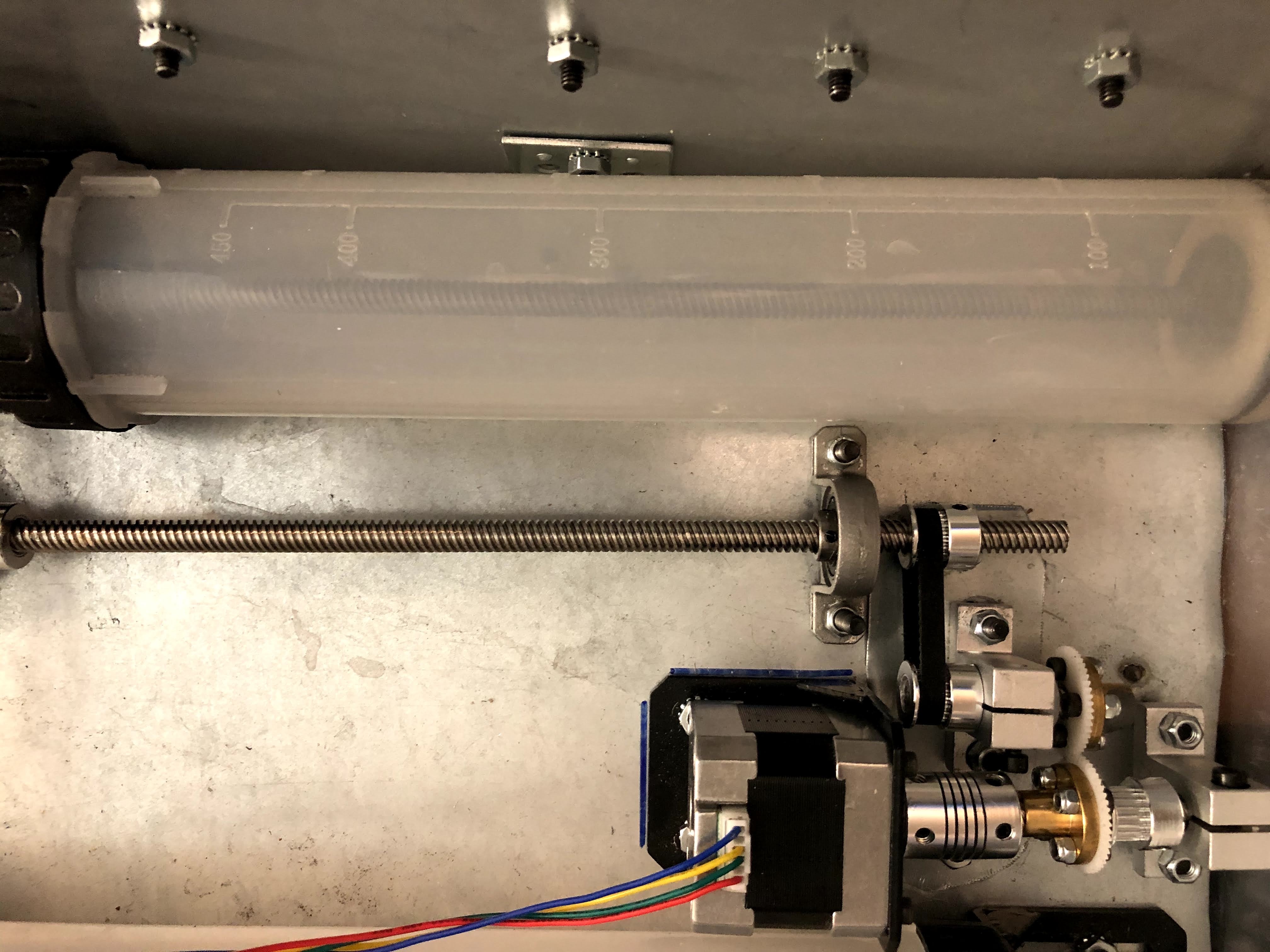

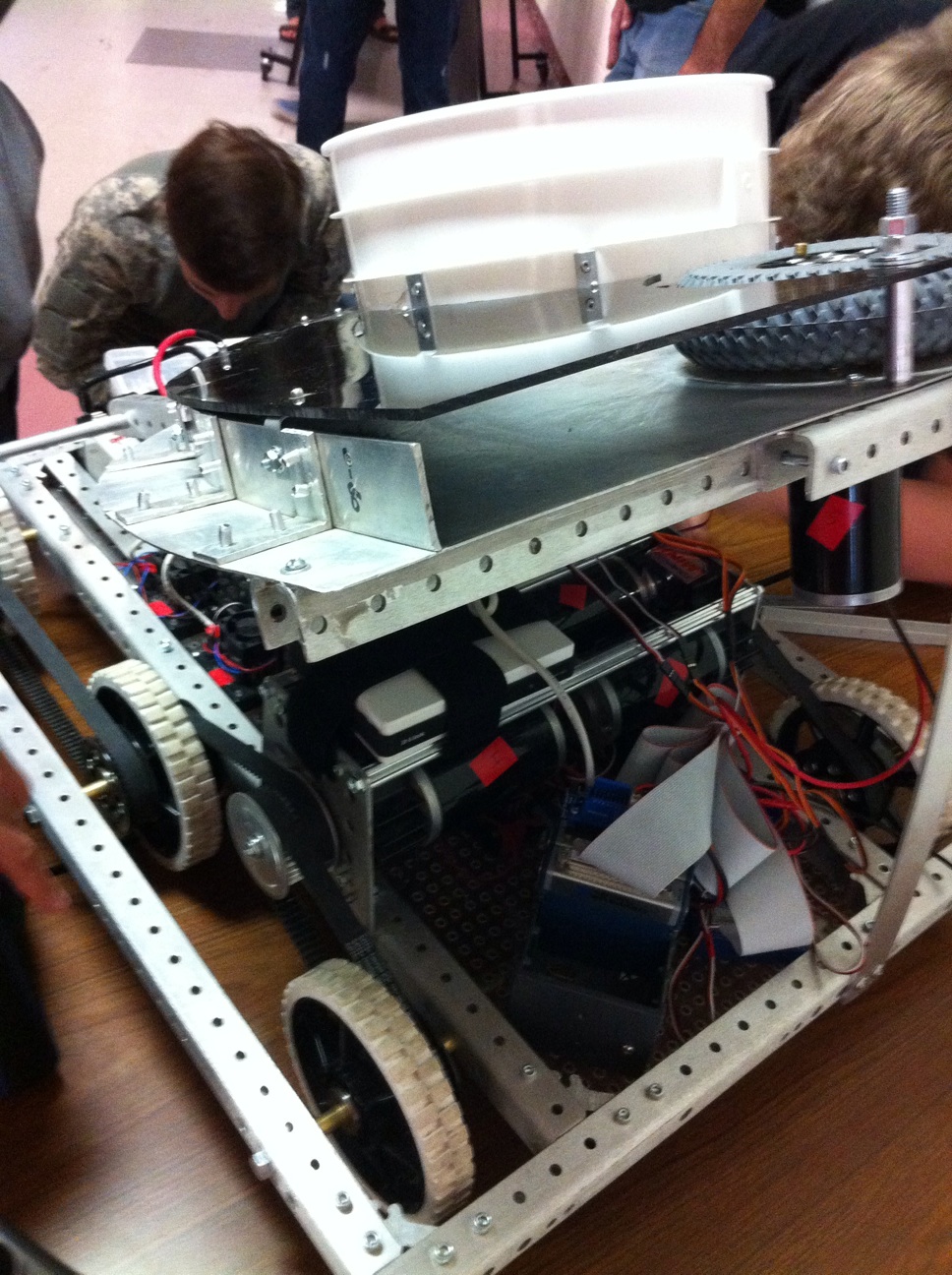

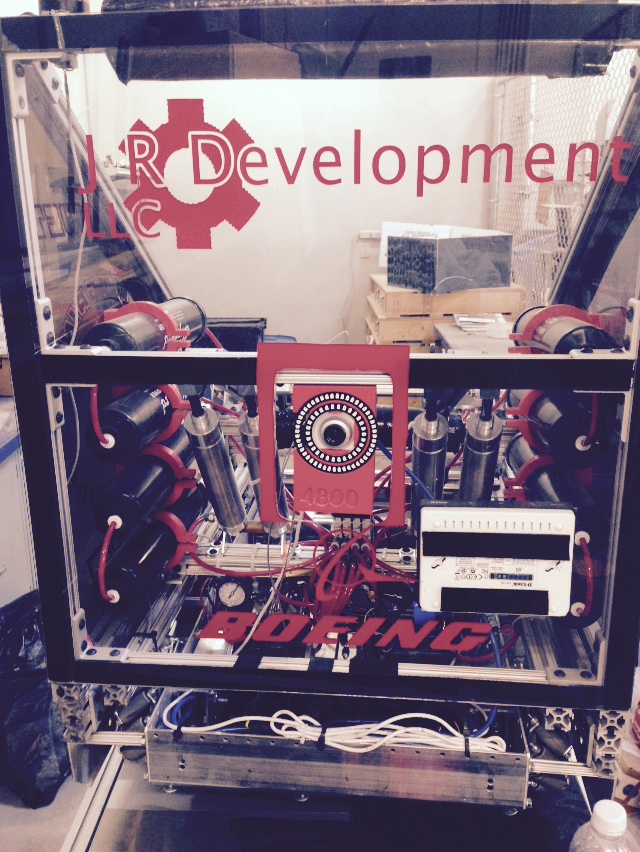

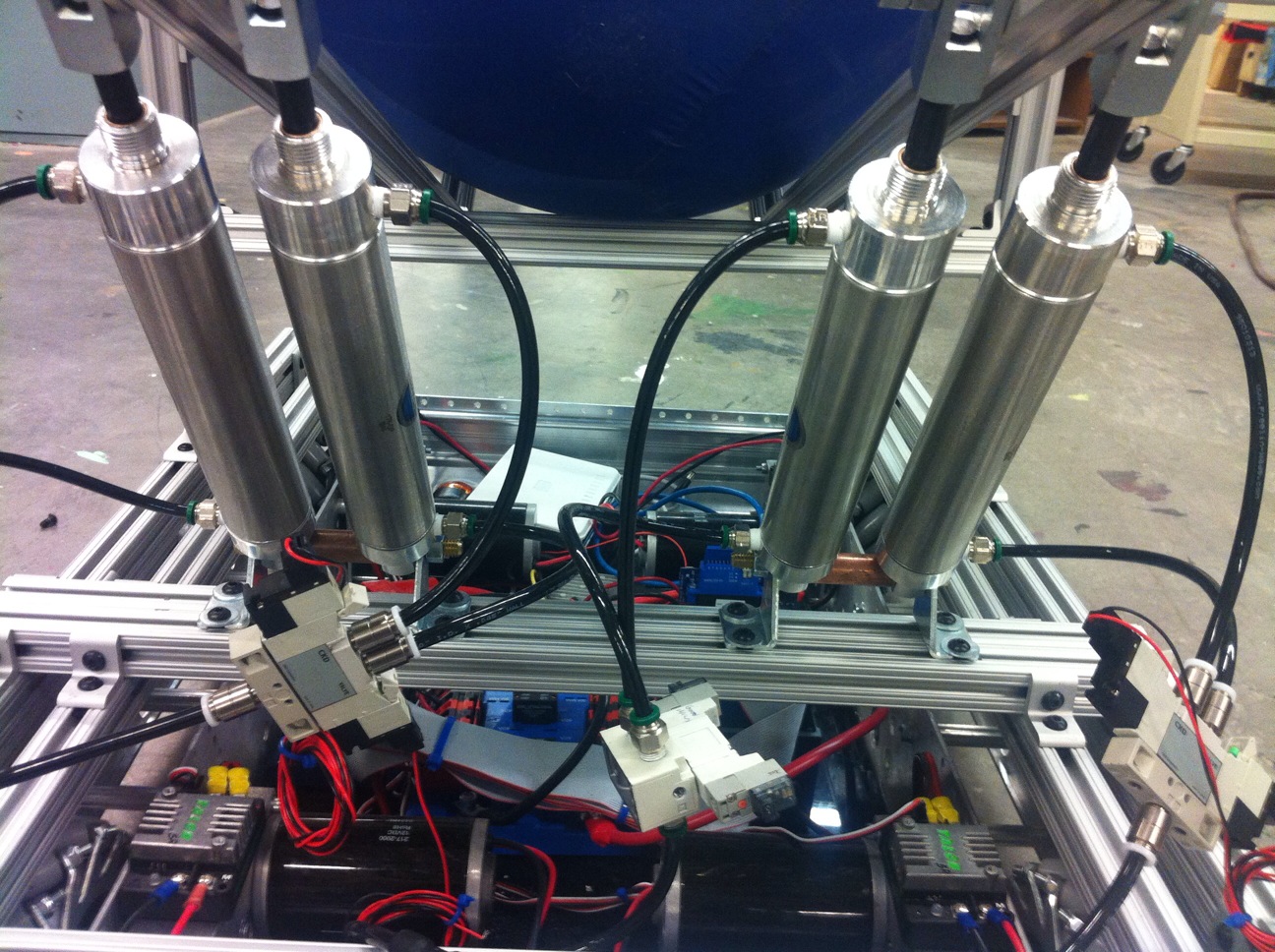

I set out to design a robot capable of mapping a pool, cleaning both its surface and bottom, all while being entirely powered by solar energy. My father's previous pool robots were limited in functionality: they could either skim the surface and remove debris or navigate the bottom to clean the sides and floor. Each of these robots cost over $1,000, so I aimed to create a device that could perform both tasks for less than that amount. I began by considering how to create a robot with sufficient buoyancy to float on the surface while also having the ability to descend to the bottom without expending excessive energy. My initial concept involved using ballast tanks that would take in water for descent and expel it when the robot needed to charge via its solar panel or skim the surface.

V2: Dual-Cylinder Monopump Ballast System

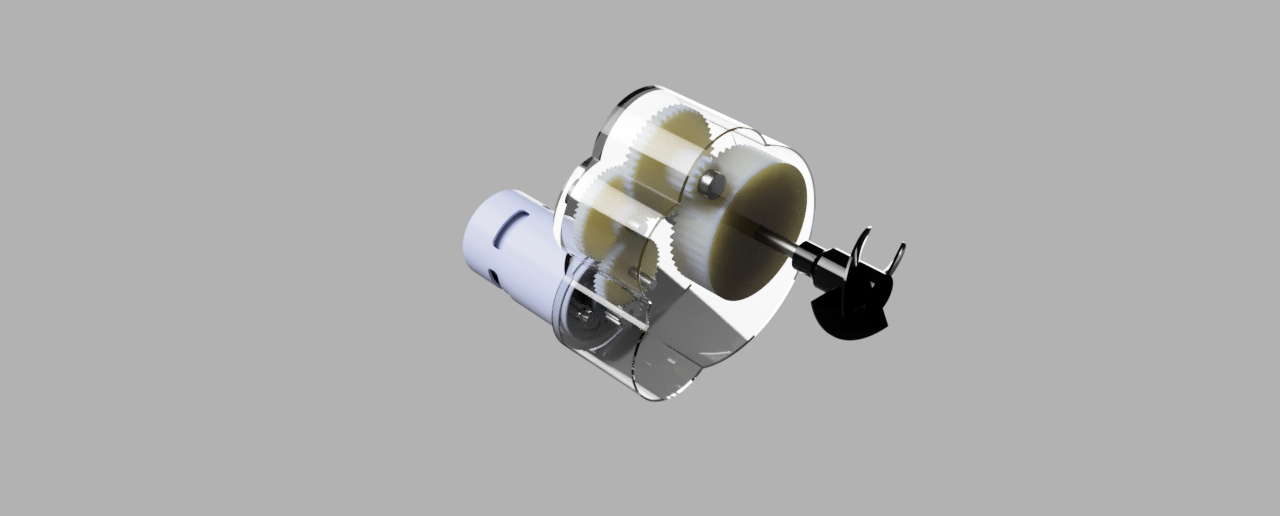

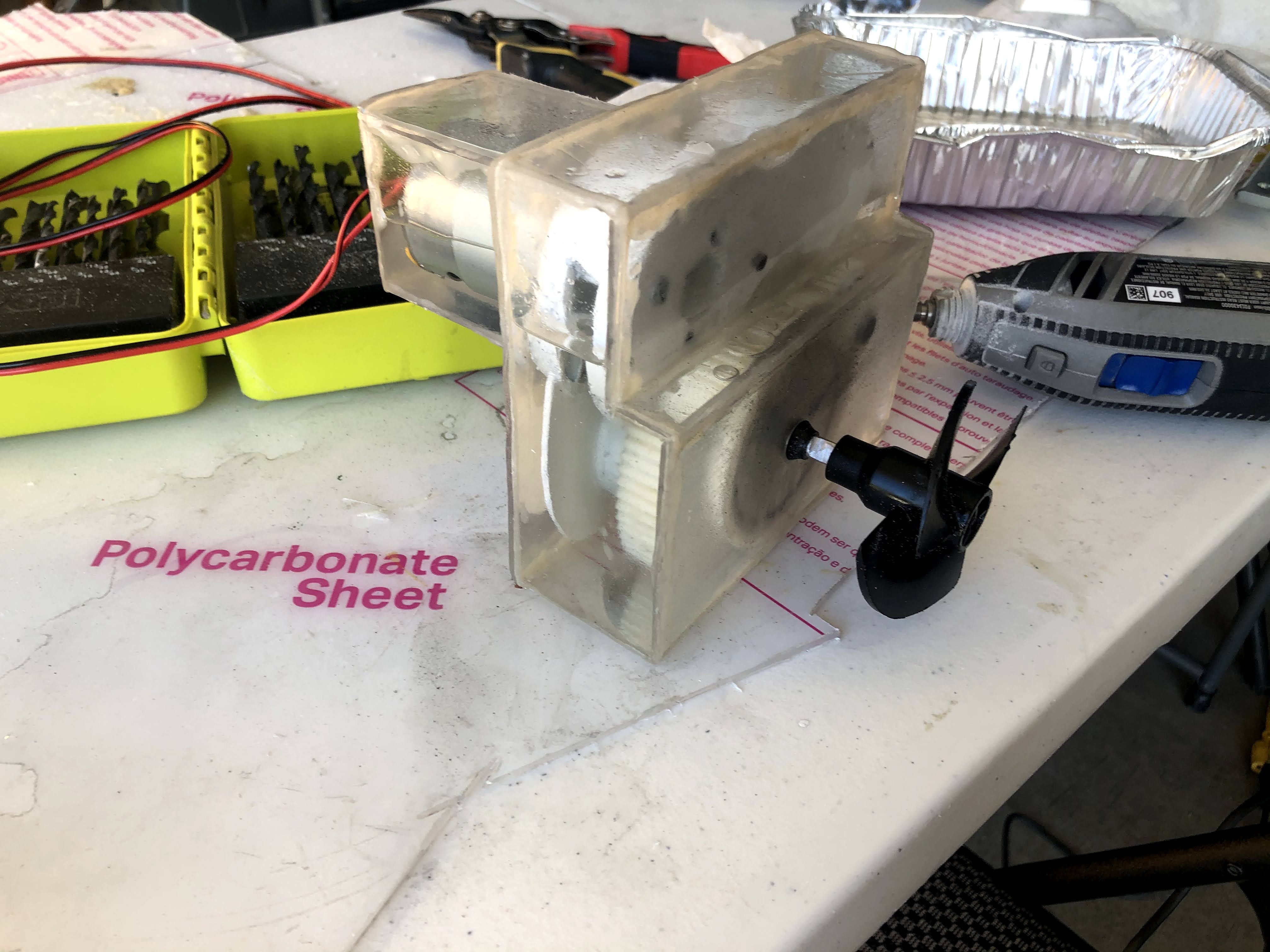

To address the failures of the previous ballast design, I implemented two cylindrical ballast tanks using pipes and endcaps sourced from Home Depot. This configuration mitigated the pressure-related issues encountered with the rectangular design. The peristaltic pump was retained, with its output tube split to fill both tanks simultaneously. I then added the bottom components: agitators for dislodging debris from the pool floor, treads for locomotion along the pool bottom, and a debris container. These components are visible in the first two photographs. Next, I added side panels to house the ultrasonic sensors, as shown in the third photograph.

The pool mapping algorithm relies on the ultrasonic sensors for ranging (pressure sensors supply only depth) and works as follows:

- The robot descends in 1-foot increments, gradually filling the ballast tanks while monitoring depth via pressure sensors.

- At each level, it emits ultrasonic pulses from its sensor array to collect distance data, then rotates 360 degrees to capture the full pool edge.

- The software maintains a 3D grid representation of the pool and updates it with each set of sensor readings, building a detailed model of the pool's geometry as the robot descends and moves horizontally.

- Grid cells are marked as empty or occupied based on sensor data, with probabilistic updates to manage noise.

- After the entire pool volume has been surveyed, the collected data undergoes noise reduction and interpolation.

- Finally, a 3D surface reconstruction algorithm generates a mesh model of the pool, which can be exported in a standard 3D file format.

To provide propulsion and draw water into the debris container, I designed and 3D printed a custom gear train driven by a 12V DC brushed motor. To protect this critical component from water ingress, I engineered a fluid-filled container to house the motor and gear assembly.
The fluid-filled enclosure offered several significant advantages:

- Waterproofing: The fluid-filled enclosure acts as an effective barrier, preventing water from infiltrating and damaging the motor's electrical components and the metal parts of the gearset.

- Pressure equalization: In a submarine environment, external water pressure increases with depth. The fluid inside the container helps equalize this pressure, preventing deformation or damage to the motor housing and internal components. This ensures consistent performance across various depths.

- Lubrication: The fluid serves as a constant lubricant for the gears and motor bearings, significantly reducing friction and wear. This is particularly important in a compact, sealed system where regular maintenance is challenging or impossible during operation.

- Heat dissipation: Motors generate heat during operation, which can be detrimental in a sealed environment. The surrounding fluid efficiently conducts heat away from the motor and gears, distributing it more evenly and potentially transferring it to the outer casing. This casing then acts as a heat exchanger with the surrounding water, maintaining optimal operating temperatures.

- Buoyancy adjustment: The choice and volume of fill fluid also affect the buoyancy of the propulsion unit and of the robot as a whole, which helps when trimming the robot's position and movement in the water.

The peristaltic pump carried over from V1 also had drawbacks:

- Flow rate limitations: Peristaltic pumps typically offer lower flow rates than other pump types, which slows ballast adjustments and can limit the robot's operational efficiency.

- Pressure constraints: These pumps generally operate at lower pressures, which may prove insufficient at greater depths.

- Tube degradation: The flexible tube in the pump is subject to wear over time, necessitating periodic replacement.

- Power efficiency: Peristaltic pumps are not as efficient as other pump types for moving large volumes of water, impacting the robot's overall energy consumption.

Air compression in the sealed ballast tanks caused further problems:

- As water was pumped into the ballast tanks, the air inside compressed according to Boyle's Law, creating increasing back-pressure against the incoming water.

- This compression affected the system in several ways:

- - The pump had to work harder to overcome the increasing resistance.

- - Compressed air still provides some buoyancy, making it harder to reach the negative buoyancy needed for submersion.

- - The compressed air expands or contracts with depth changes, causing unexpected buoyancy shifts.

- - The increasing back-pressure stressed the pump, reducing its efficiency and lifespan.

- These issues led to incomplete tank filling, unpredictable buoyancy behavior, and reduced energy efficiency.
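Boyle's law makes the back-pressure problem easy to quantify. If an unvented tank of volume V starts full of air at atmospheric pressure p0 and the pump pushes in a volume Vw of water, the trapped air (treated as an ideal gas at constant temperature) rises to p = p0·V/(V − Vw). The sketch below evaluates that relation; the helper names are hypothetical and the numbers in the note are illustrative, not measurements from the robot.

```cpp
#include <stdexcept>

// Absolute pressure (Pa) of the air trapped in an unvented tank after pumping in
// `waterVolume` of water, assuming isothermal compression (Boyle's law: p0*V0 = p*V).
double trappedAirPressure(double tankVolume, double waterVolume,
                          double atmosphericPressure = 101325.0) {
    if (waterVolume < 0.0 || waterVolume >= tankVolume)
        throw std::invalid_argument("water volume must be in [0, tankVolume)");
    return atmosphericPressure * tankVolume / (tankVolume - waterVolume);
}

// Gauge back-pressure (Pa) the pump must overcome to push in that much water.
double pumpBackPressure(double tankVolume, double waterVolume,
                        double atmosphericPressure = 101325.0) {
    return trappedAirPressure(tankVolume, waterVolume, atmosphericPressure) - atmosphericPressure;
}
```

Half-filling a tank doubles the air pressure to about 202.7 kPa absolute, so the pump must push against roughly one extra atmosphere; three-quarters full requires about 304 kPa gauge. For comparison, each meter of water depth adds only about 9.8 kPa, which is why venting or displacing the air matters far more than depth in a pool.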
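The V2 mapping step, in which grid cells are marked as empty or occupied using probabilistic updates, is commonly implemented with log-odds occupancy updates. The sketch below shows that standard technique on a 3D grid. It is an illustration under assumed parameters, not the robot's original code: the class name and the inverse sensor model (0.85 for an echo, 0.4 for no echo) are hypothetical.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical 3D occupancy grid using log-odds updates.
class OccupancyGrid3D {
public:
    OccupancyGrid3D(std::size_t nx, std::size_t ny, std::size_t nz)
        : nx_(nx), ny_(ny), logOdds_(nx * ny * nz, 0.0) {}

    // Fold one observation of cell (i, j, k) into its log-odds value.
    void update(std::size_t i, std::size_t j, std::size_t k, bool hit) {
        double &l = logOdds_[index(i, j, k)];
        l += hit ? kHit : kMiss;
        if (l > kClamp) l = kClamp;    // clamping keeps cells able to change later
        if (l < -kClamp) l = -kClamp;
    }

    // Posterior probability that the cell is occupied (logistic of the log-odds).
    double probability(std::size_t i, std::size_t j, std::size_t k) const {
        return 1.0 - 1.0 / (1.0 + std::exp(logOdds_[index(i, j, k)]));
    }

    bool occupied(std::size_t i, std::size_t j, std::size_t k) const {
        return probability(i, j, k) > 0.5;
    }

private:
    static double logit(double p) { return std::log(p / (1.0 - p)); }

    std::size_t index(std::size_t i, std::size_t j, std::size_t k) const {
        return (k * ny_ + j) * nx_ + i;
    }

    // Assumed inverse sensor model: P(occupied | echo) = 0.85, P(occupied | no echo) = 0.4.
    const double kHit = logit(0.85);
    const double kMiss = logit(0.4);
    const double kClamp = 5.0;

    std::size_t nx_, ny_;
    std::vector<double> logOdds_;
};
```

Working in log-odds turns each Bayesian update into an addition, so repeated noisy readings of the same cell accumulate evidence cheaply; an unobserved cell stays at probability 0.5.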

V1: Monopump Rectangular Ballast System

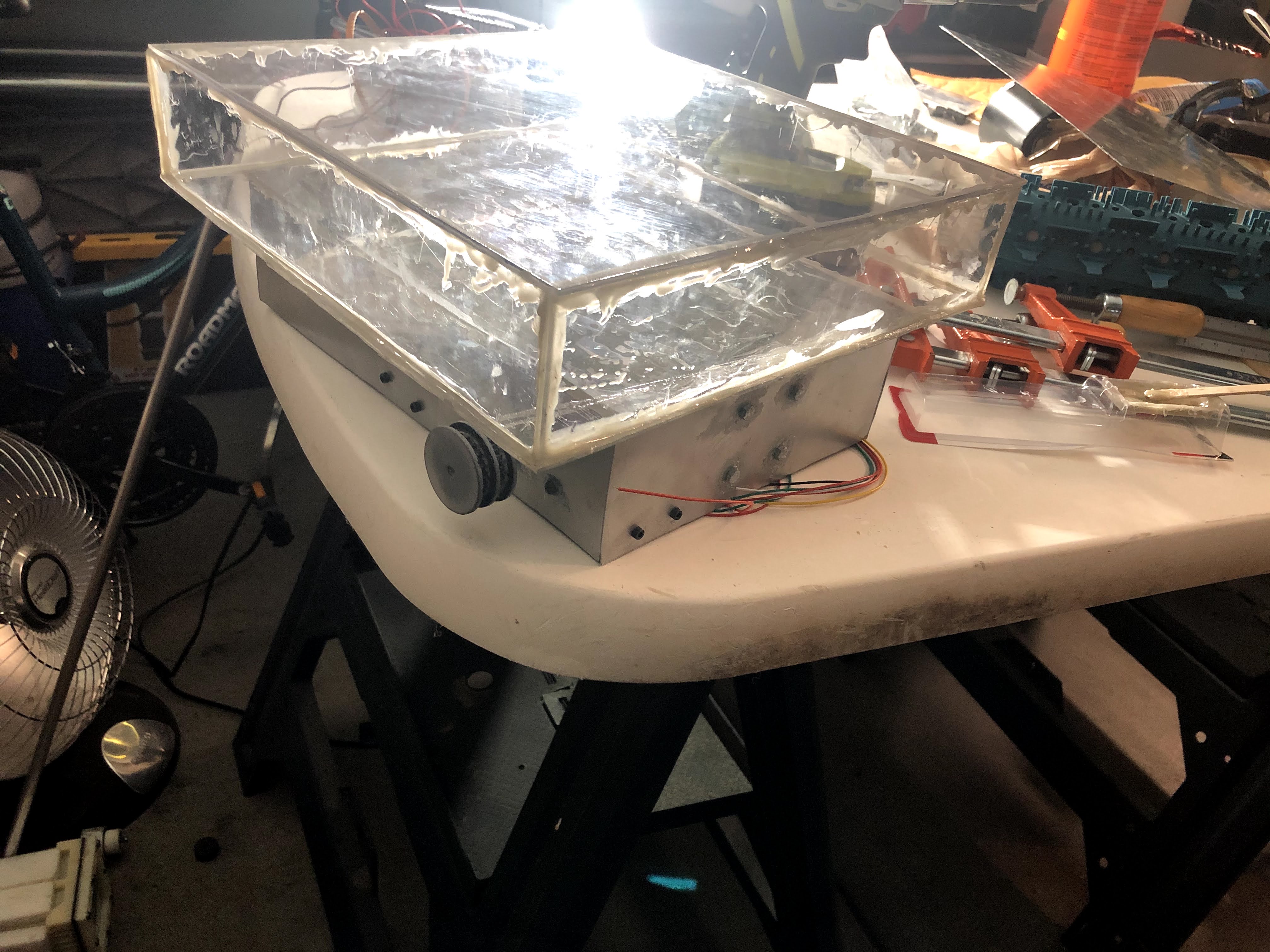

This design aimed to significantly reduce the number of moving parts, and the associated cost, compared to the previous piston ballast system. To achieve this, I constructed a rectangular tank from polycarbonate sheets sourced from Home Depot, cut and epoxied together. I then incorporated a peristaltic pump, selected for its self-priming capability and reversible operation. Peristaltic pumps can handle air in the line without losing prime, making them well suited to intermittent water pumping. The pump's reversibility allows both water intake and expulsion with a single mechanism. The intake was fitted with a filter on the robot's side panel, while the output tube fed directly into the rectangular ballast tank.

To enable navigation and obstacle avoidance, I waterproofed ultrasonic sensors and integrated them into the robot's side panel. All electronic components were housed in a custom-built, openable waterproof container. Using data from the ultrasonic sensors, I developed an algorithm for obstacle detection and avoidance.

To address the cleaning functionality, I 3D printed several timing pulleys. These pulleys were driven on each side by two 24V DC brushed motors, which in turn drove a belt connected to another timing pulley. This secondary pulley actuated brushes designed to dislodge debris at the pool bottom so it could be sucked into the debris container. This mechanism ensured efficient cleaning while minimizing power consumption through the use of geared transmissions.

Progressing through multiple design iterations provided valuable insights into hydrodynamics, material properties, and system integration. Key lessons included the importance of fluid dynamics in underwater robotics, the difficulty of waterproofing electronic components, and the critical role of efficient power management in solar-powered systems.

One significant failure was the rectangular tank's inability to withstand large forces. Rectangular structures are inherently weak under high pressure because stress concentrates at the corners. Unlike curved surfaces, which distribute force evenly, the sharp angles of a rectangle create weak points where material failure is likely to begin. Flat surfaces also deflect under pressure, which can lead to seal failures or structural deformation over time. This experience underscored the importance of pressure distribution and structural integrity in underwater design, and pointed toward cylindrical or spherical shapes for pressure vessels in aquatic applications, which I used in the next version.

V0: Piston Ballast Design

My first ballast design used piston ballasts. A piston ballast consists of a cylinder with a movable piston inside. As the piston moves, it either creates space for water to enter or forces water out, allowing the robot to control its buoyancy. To reduce costs, each piston ballast incorporated a timing pulley driven by a single servo motor to take in or expel water. However, these proved difficult to build by hand and often leaked water past the piston disk, causing problems with submersion and resurfacing.

The piston ballast design proved fragile and prone to failure. Seal issues frequently arose, allowing air to enter and disrupt the suction process. Mechanical problems, such as misalignment or debris interfering with the piston's movement, were common. The system often struggled to create enough vacuum to draw in water effectively, especially at greater depths, and the intake ports were susceptible to blockages from pool debris. The video above demonstrates one of the failed tests using the piston ballast system, highlighting the design's inherent vulnerabilities and the challenges of achieving reliable performance.

To test the submarine's performance without first having to seal the electronics, I housed them in a waterproof container and ran a long wire from the top to the sealed motors. This setup let me focus on core functionality while isolating sensitive components from water. The last photo in the carousel above shows the waterproof container used for this purpose. I eventually moved on to a new design, because the fragility of this initial approach undermined long-term reliability, an essential factor when developing a product for consumer use.

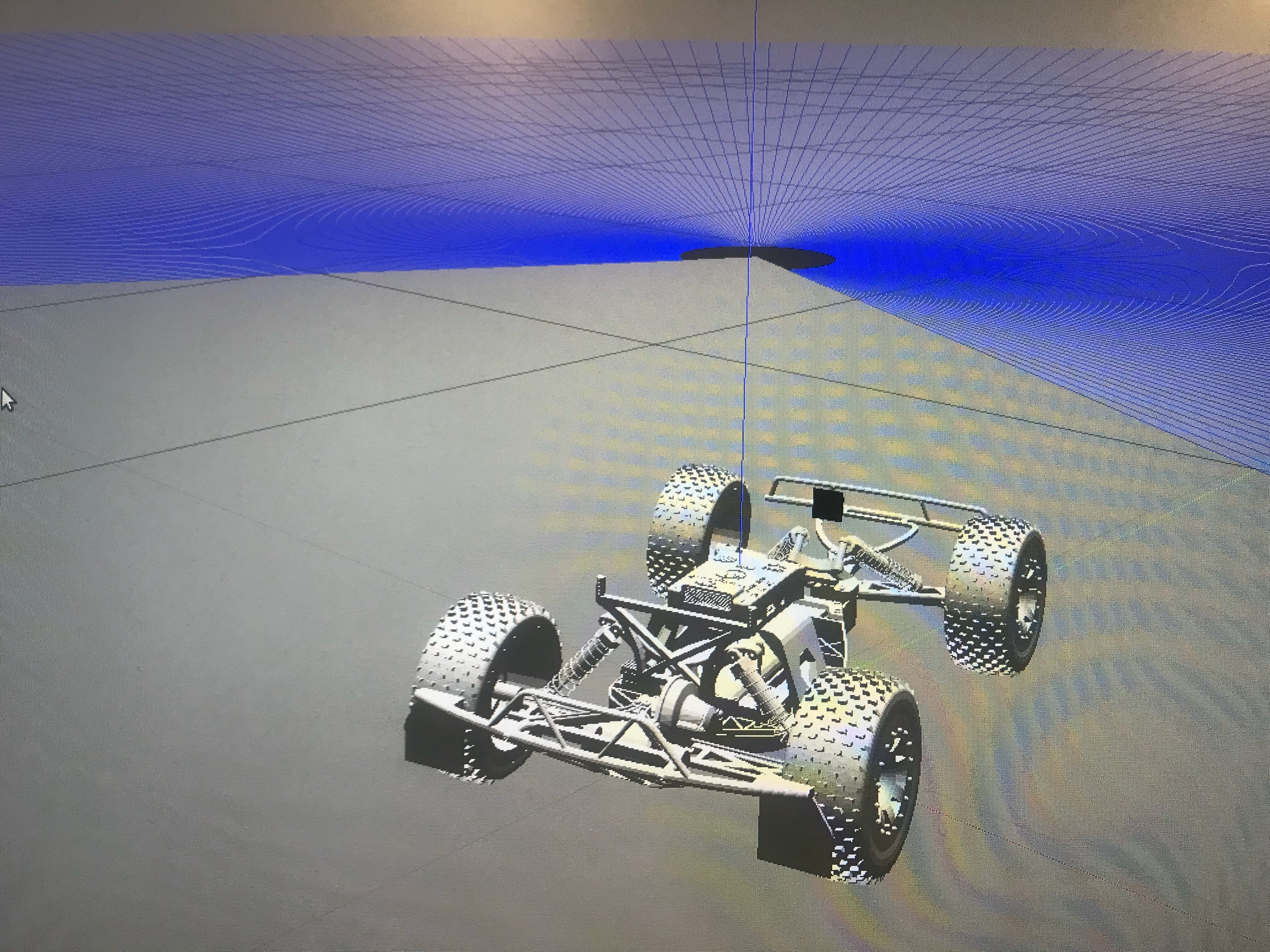

Project Overview

This project implements an autonomous rover for the NASA Sample Return Challenge using Python. The rover is designed to navigate and map its environment, identify and collect rock samples, and return to its starting location.

Key Features

The rover demonstrates capabilities in autonomous navigation and mapping, allowing it to explore unknown terrains efficiently. It employs sophisticated obstacle avoidance techniques to ensure safe traversal through challenging environments. A key aspect of its mission is the ability to identify and collect rock samples, showcasing its potential for scientific exploration. The return-to-start functionality ensures that the rover can complete its mission cycle by returning collected samples to the designated starting point.

Technical Implementation

Perception

The rover's perception system utilizes a perspective transform to generate a top-down view of its surroundings, providing a clear understanding of the terrain layout. Color thresholding techniques are employed to distinguish between navigable terrain, obstacles, and potential rock samples. The system performs both rover-centric and world-centric coordinate transformations, enabling accurate localization and mapping.
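The perception steps described above (thresholding a warped top-down image, then mapping pixels into rover-centric and world coordinates) can be sketched as follows. The project itself is written in Python; this C++ version only mirrors the math. The RGB threshold of (160, 160, 160), the row-major RGB image layout, the function names, and the `scale` factor (image pixels per world-map cell) are assumptions for illustration.

```cpp
#include <cmath>
#include <cstdint>
#include <utility>
#include <vector>

// Warped top-down RGB image, row-major, 3 bytes per pixel (assumed layout).
struct Image {
    int width, height;
    std::vector<std::uint8_t> rgb;
};

struct Vec2 {
    double x, y;
};

// Pixels brighter than the threshold in all three channels count as navigable terrain.
std::vector<std::pair<int, int>> navigablePixels(const Image &img, std::uint8_t r = 160,
                                                 std::uint8_t g = 160, std::uint8_t b = 160) {
    std::vector<std::pair<int, int>> out;  // (column, row)
    for (int row = 0; row < img.height; ++row)
        for (int col = 0; col < img.width; ++col) {
            const std::uint8_t *p = &img.rgb[3 * (row * img.width + col)];
            if (p[0] > r && p[1] > g && p[2] > b)
                out.emplace_back(col, row);
        }
    return out;
}

// Rover-centric frame: origin at the bottom-center of the image, x forward (up), y left.
Vec2 toRoverCoords(int col, int row, int width, int height) {
    return {static_cast<double>(height - row), width / 2.0 - col};
}

// Rotate by the rover's yaw, scale down to map cells, and translate by its world position.
Vec2 toWorldCoords(Vec2 rover, double yawDeg, double roverX, double roverY, double scale) {
    const double yaw = yawDeg * 3.14159265358979323846 / 180.0;
    const double xr = rover.x * std::cos(yaw) - rover.y * std::sin(yaw);
    const double yr = rover.x * std::sin(yaw) + rover.y * std::cos(yaw);
    return {xr / scale + roverX, yr / scale + roverY};
}
```

Obstacles and rock samples would use the same pipeline with different color ranges; only the threshold test changes.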

Decision Making

The decision-making algorithm incorporates several features to ensure robust performance. It includes stuck detection and recovery mechanisms, allowing the rover to extricate itself from challenging situations. Circular motion detection and correction prevent the rover from getting trapped in repetitive patterns. The navigation system is goal-oriented, efficiently guiding the rover towards objectives while maintaining a comprehensive tracking system for sample collection.
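Stuck detection of this kind is often implemented as a simple timer: if the rover is commanding throttle but its measured speed stays near zero for longer than a grace period, the decision logic switches to a recovery maneuver. A minimal sketch, in which the class name, the 0.05 m/s speed floor, and the 3 s timeout are illustrative assumptions rather than the project's values:

```cpp
// Hypothetical stuck detector: reports "stuck" when forward throttle is applied
// but ground speed stays below a floor for longer than a timeout.
class StuckDetector {
public:
    explicit StuckDetector(double speedFloor = 0.05, double timeoutSec = 3.0)
        : speedFloor_(speedFloor), timeoutSec_(timeoutSec) {}

    // Call once per control tick; returns true while the rover appears stuck.
    bool update(double throttle, double speed, double dtSec) {
        if (throttle > 0.0 && speed < speedFloor_)
            stalledFor_ += dtSec;  // commanding motion but not moving
        else
            stalledFor_ = 0.0;     // moving (or not trying to): reset the timer
        return stalledFor_ >= timeoutSec_;
    }

    void reset() { stalledFor_ = 0.0; }

private:
    double speedFloor_;
    double timeoutSec_;
    double stalledFor_ = 0.0;
};
```

Keeping the timer separate from the recovery behavior makes it easy to tune the timeout without touching the maneuver itself.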

Performance

The rover maps approximately 94% of its environment on average. Its mapping fidelity (the share of mapped terrain that matches the ground truth) is around 80%, ensuring reliable operation and accurate data collection throughout its mission.

Future Improvements

Future development efforts will focus on increasing the overall system fidelity, pushing beyond the current 80% benchmark. Implementation of map boundaries is planned to enable more efficient exploration strategies. Additionally, optimizing the time taken for exploration and sample collection will be a key area of improvement, enhancing the rover's efficiency in completing its objectives.

GitHub Repository

For more details and access to the code, visit the GitHub repository.

- A script for sending and receiving data packets between modules

- Tests to check signal strength (as demonstrated in the video above)

- Control loops based on the received signal strength

- Area navigation

- Object detection

- Manipulation
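For control loops driven by received signal strength, a common starting point is the log-distance path-loss model, RSSI(d) = RSSI(1 m) − 10·n·log10(d), inverted to estimate range, combined with a proportional controller that holds a robot near a target separation from its neighbor so the link stays usable. The sketch assumes a reference RSSI of −40 dBm at 1 m and a path-loss exponent n of 2.5; both are placeholders that would need calibration, and the function names are hypothetical.

```cpp
#include <algorithm>
#include <cmath>

// Estimate distance (m) from RSSI (dBm) with the log-distance path-loss model,
// given the RSSI measured at 1 m and the path-loss exponent n.
double rssiToDistance(double rssiDbm, double rssiAt1m = -40.0, double n = 2.5) {
    return std::pow(10.0, (rssiAt1m - rssiDbm) / (10.0 * n));
}

// Proportional controller: speed command (m/s) toward the neighbor that closes the
// gap to `targetDistance`, clamped to +/- maxSpeed. Positive means "move closer".
double rssiSpeedCommand(double rssiDbm, double targetDistance, double kp = 0.5,
                        double maxSpeed = 0.3) {
    const double error = rssiToDistance(rssiDbm) - targetDistance;  // > 0: too far away
    return std::max(-maxSpeed, std::min(kp * error, maxSpeed));
}
```

RSSI is noisy indoors, so in practice the reading would usually be averaged over several packets before being fed to the controller.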

```cpp
#include <chrono>
#include <cmath>
#include <iostream>
#include <string>
#include <thread>
#include <vector>

#include <boost/asio.hpp>
#include <rec/robotino/api2/all.h>

struct Point
{
    double x, y;
    Point(double x = 0, double y = 0) : x(x), y(y) {}
};

// Minimal SCIP 2.0 driver for a Hokuyo URG-series LIDAR on a serial/USB port
class HokuyoURG
{
private:
    boost::asio::io_context io;
    boost::asio::serial_port serial;

public:
    HokuyoURG(const std::string &port, unsigned int baud_rate)
        : serial(io, port)
    {
        serial.set_option(boost::asio::serial_port_base::baud_rate(baud_rate));
    }

    bool initialize()
    {
        write("SCIP2.0\n");
        std::vector<std::string> reply = readResponse();
        if (reply.empty() || reply[0].find("SCIP2.0") == std::string::npos)
            return false;

        write("BM\n"); // Switch the laser on; GD requests fail while it is off
        readResponse();
        return true;
    }

    // Returns scan points in meters in the sensor frame (x forward, y left)
    std::vector<Point> scan()
    {
        write("GD0000108000\n"); // Request scan from step 0 to step 1080, no grouping
        std::vector<std::string> lines = readResponse();
        std::vector<Point> scan_points;

        // Reply: echo line, status line ("00" = OK), timestamp line, then data lines
        if (lines.size() < 4 || lines[0].compare(0, 2, "GD") != 0 ||
            lines[1].compare(0, 2, "00") != 0)
        {
            std::cerr << "Invalid response from LIDAR" << std::endl;
            return scan_points;
        }

        // Each data line ends with a one-character checksum: strip it and concatenate
        std::string data;
        for (size_t k = 3; k < lines.size(); ++k)
            data += lines[k].substr(0, lines[k].size() - 1);

        const double STEP_ANGLE = 0.25 * M_PI / 180.0;    // 0.25 degrees in radians
        const double START_ANGLE = -135.0 * M_PI / 180.0; // -135 degrees in radians

        for (size_t i = 0; i < 1081 && 3 * i + 3 <= data.size(); ++i)
        {
            // Decode the 3-character encoding: 6 bits per character, offset 0x30
            const char *c = data.data() + 3 * i;
            int distance = ((c[0] - 0x30) << 12) | ((c[1] - 0x30) << 6) | (c[2] - 0x30);

            // Values below 20 mm are error codes rather than ranges
            if (distance < 20)
                continue;

            // Convert polar coordinates (mm) to Cartesian (m)
            double angle = START_ANGLE + i * STEP_ANGLE;
            scan_points.emplace_back(distance * std::cos(angle) / 1000.0,
                                     distance * std::sin(angle) / 1000.0);
        }
        return scan_points;
    }

private:
    void write(const std::string &data)
    {
        boost::asio::write(serial, boost::asio::buffer(data));
    }

    // Reads one complete SCIP reply; the sensor terminates each reply with an empty line
    std::vector<std::string> readResponse()
    {
        std::vector<std::string> lines;
        std::string line;
        char c;
        while (boost::asio::read(serial, boost::asio::buffer(&c, 1)))
        {
            if (c != '\n')
            {
                line += c;
                continue;
            }
            if (line.empty())
                break;
            lines.push_back(line);
            line.clear();
        }
        return lines;
    }
};

// Potential-field navigation: attraction to the goal plus repulsion from LIDAR points
class RobotinoRobot : public rec::robotino::api2::Com
{
private:
    rec::robotino::api2::OmniDrive omniDrive;
    rec::robotino::api2::Odometry odometry;
    HokuyoURG *lidar;
    Point goal;
    std::vector<Point> obstacles; // LIDAR points, robot frame (LIDAR assumed centered, facing forward)

    double attractiveConst = 1.0;
    double repulsiveConst = 100.0;
    double influenceRange = 0.5; // in meters
    double maxVelocity = 0.2;    // in m/s

public:
    RobotinoRobot(const std::string &hostname, Point goal, HokuyoURG *lidar)
        : goal(goal), lidar(lidar)
    {
        setAddress(hostname.c_str());
    }

    void updateLidarData()
    {
        obstacles = lidar->scan();
    }

    // Unit vector toward the goal scaled by attractiveConst, in the world frame
    Point calculateAttractivePotential()
    {
        double x, y, phi;
        odometry.readings(&x, &y, &phi);
        double dx = goal.x - x;
        double dy = goal.y - y;
        double distance = std::sqrt(dx * dx + dy * dy);
        if (distance < 1e-9)
            return Point(0, 0);
        return Point(attractiveConst * dx / distance, attractiveConst * dy / distance);
    }

    // Sum of repulsive forces in the robot frame. Scan points are already relative
    // to the robot, so the direction away from an obstacle is its negated position.
    Point calculateRepulsivePotential()
    {
        Point total(0, 0);
        for (const auto &obstacle : obstacles)
        {
            double dx = -obstacle.x;
            double dy = -obstacle.y;
            double distance = std::sqrt(dx * dx + dy * dy);
            if (distance > 1e-6 && distance < influenceRange)
            {
                double magnitude = repulsiveConst * (1 / distance - 1 / influenceRange) / (distance * distance);
                total.x += magnitude * dx / distance;
                total.y += magnitude * dy / distance;
            }
        }
        return total;
    }

    void move()
    {
        updateLidarData();

        double x, y, phi;
        odometry.readings(&x, &y, &phi);

        // Rotate the world-frame attractive force into the robot frame (by -phi)
        Point attractiveWorld = calculateAttractivePotential();
        double ax = std::cos(phi) * attractiveWorld.x + std::sin(phi) * attractiveWorld.y;
        double ay = -std::sin(phi) * attractiveWorld.x + std::cos(phi) * attractiveWorld.y;

        Point repulsive = calculateRepulsivePotential();
        double vx = ax + repulsive.x;
        double vy = ay + repulsive.y;

        // Normalize and scale velocity
        double magnitude = std::sqrt(vx * vx + vy * vy);
        if (magnitude > maxVelocity)
        {
            vx = (vx / magnitude) * maxVelocity;
            vy = (vy / magnitude) * maxVelocity;
        }

        // OmniDrive expects velocities in the robot's local frame
        omniDrive.setVelocity(vx, vy, 0);

        std::cout << "Robot at (" << x << ", " << y << "), φ = " << phi << std::endl;
    }

    bool reachedGoal()
    {
        double x, y, phi;
        odometry.readings(&x, &y, &phi);
        double dx = goal.x - x;
        double dy = goal.y - y;
        return std::sqrt(dx * dx + dy * dy) < 0.1;
    }

    void stop()
    {
        omniDrive.setVelocity(0, 0, 0);
    }

    void errorEvent(const char *errorString) override
    {
        std::cerr << "Error: " << errorString << std::endl;
    }

    void connectedEvent() override
    {
        std::cout << "Connected to Robotino" << std::endl;
    }

    void connectionClosedEvent() override
    {
        std::cout << "Connection to Robotino closed" << std::endl;
    }
};

int main()
{
    HokuyoURG lidar("/dev/ttyACM0", 115200);
    if (!lidar.initialize())
    {
        std::cerr << "Failed to initialize LIDAR" << std::endl;
        return 1;
    }

    RobotinoRobot robot("172.26.1.1", Point(10, 15), &lidar); // Replace IP with your Robotino's IP
    robot.connectToServer(true); // Blocking connect
    if (!robot.isConnected())
    {
        std::cerr << "Failed to connect to Robotino" << std::endl;
        return 1;
    }

    while (robot.isConnected() && !robot.reachedGoal())
    {
        robot.processEvents(); // Dispatch API callbacks (errors, connection changes)
        robot.move();
        std::this_thread::sleep_for(std::chrono::milliseconds(100)); // 10 Hz update rate
    }

    robot.stop(); // Stop the robot
    if (robot.reachedGoal())
        std::cout << "Goal reached!" << std::endl;
    robot.disconnectFromServer();
    return 0;
}
```

- creating an outdoor area for events, socialization, and dining

- incorporating sustainable design elements

- combining both retail and housing within the structure

- Tabletop

- Backsplash

- Legs

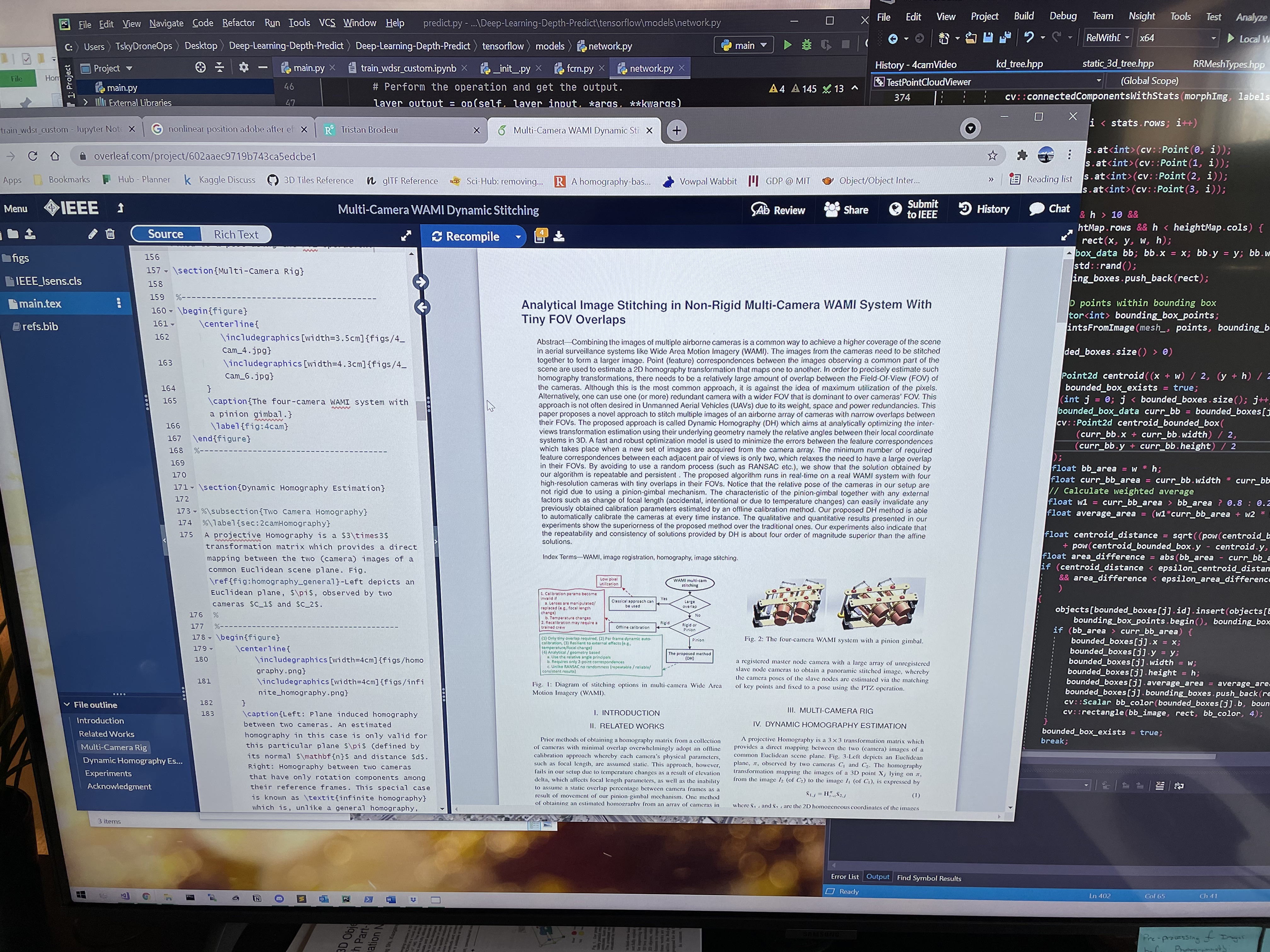

Analytical Image Stitching in Non-Rigid Multi-Camera WAMI

IEEE Sensors Letters

Issue 3 • March 2022

Montreal, Canada (Virtual)

H. AliAkbarpour, T. Brodeur, S. Suddarth

Abstract: Combining the images of multiple airborne cameras is a common way to achieve a higher scene coverage in Wide Area Motion Imagery (WAMI). This paper proposes a novel approach, named Dynamic Homography (DH), to stitch multiple images of an airborne array of ’non-rigid’ cameras with ’narrow’ overlaps between their FOVs. It estimates the inter-views transformations using their underlying geometry namely the relative angles between their local coordinate systems in 3D. A fast and robust optimization model is used to minimize the errors between the feature correspondences which takes place when a new set of images are acquired from the camera array. The minimum number of required feature correspondences between each adjacent pair of views is only two, which relaxes the need to have a large overlap in their FOVs. Our quantitative and qualitative experiments show the superiority of the proposed method compared to the traditional ones.

Point Cloud Object Segmentation Using Multi Elevation-Layer 2D Bounding-Boxes

ICCV Workshop on Analysis of Aerial Motion Imagery (WAAMI)

2021

Montreal, Canada (Virtual)

T. Brodeur, H. AliAkbarpour, S. Suddarth

Abstract: Segmentation of point clouds is a necessary pre-processing technique when object discrimination is needed for scene understanding. In this paper, we propose a segmentation technique utilizing 2D bounding-box data obtained via the orthographic projection of 3D points onto a plane at multiple elevation layers. Connected components is utilized to obtain bounding-box data, and a consistency metric between bounding-boxes at various elevation layers helps determine the classification of the bounding-box to an object of the scene. The merging of point data within each 2D bounding-box results in an object-segmented point cloud. Our method conducts segmentation using only the topological information of the point data within a dataset, requiring no extra computation of normals, creation of an octree or k-d tree, nor a dependency on RGB or intensity data associated with a point. Initial experiments are run on a set of point cloud datasets obtained via photogrammetric means, as well as some open-source, LIDAR-generated point clouds, showing the method to be capture agnostic. Results demonstrate the efficacy of this method in obtaining a distinct set of objects contained within a point cloud.

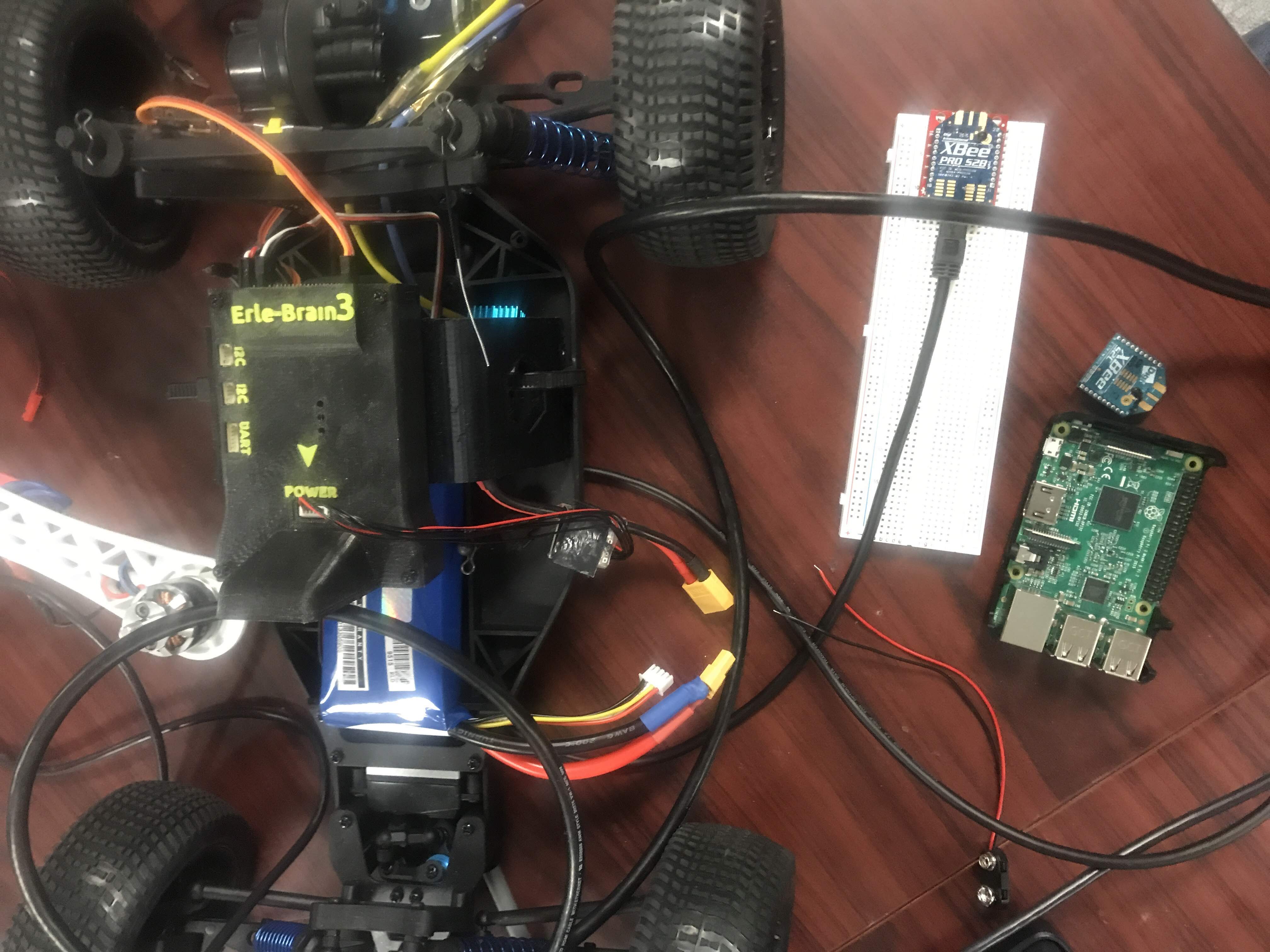

Search and Rescue Operations with Mesh Networked Robots

IEEE Ubiquitous Computing, Electronics and Mobile Communication Conference (UEMCON)

2018

Columbia University; New York City, NY

T. Brodeur, P. Regis, D. Feil-Seifer, S. Sengupta

Abstract: Efficient path planning and communication of multirobot systems in the case of a search and rescue operation is a critical issue facing robotics disaster relief efforts. Ensuring all the nodes of a specialized robotic search team are within range, while also covering as much area as possible to guarantee efficient response time, is the goal of this paper. We propose a specialized search-and-rescue model based on a mesh network topology of aerial and ground robots. The proposed model is based on several methods. First, each robot determines its position relative to other robots within the system, using RSSI. Packets are then communicated to other robots in the system detailing important information regarding robot system status, status of the mission, and identification number. The results demonstrate the ability to determine multi-robot navigation with RSSI, allowing low computation costs and increased search-and-rescue time efficiency.

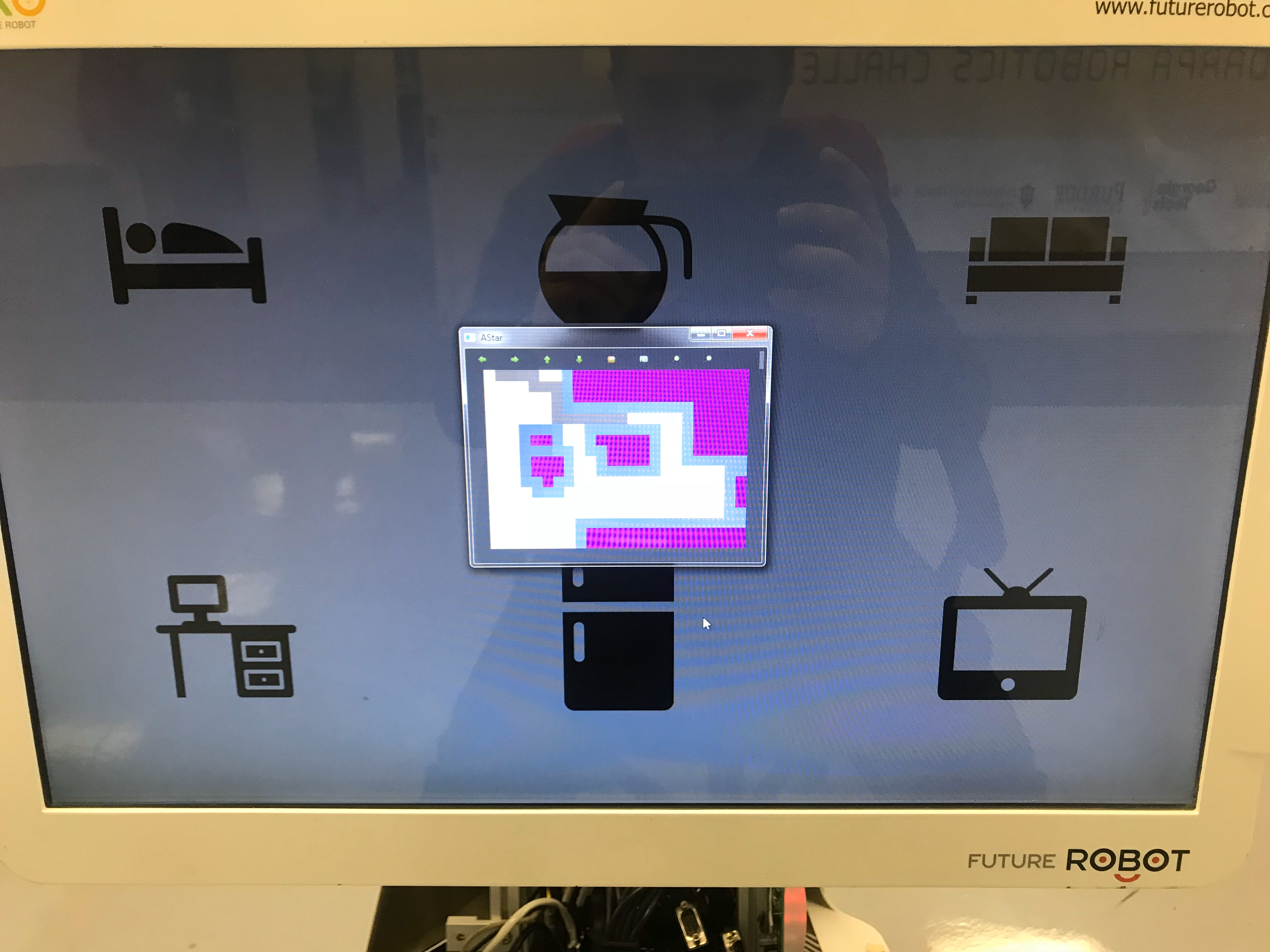

Directory Navigation with Robotic Assistance

IEEE Computing and Communication Workshop and Conference (CCWC)

2018

University of Nevada, Las Vegas; Las Vegas, NV

T. Brodeur, A. Cater, J. Chagas Vaz, P. Oh

Abstract: Malls are full of retail store and restaurant chains, allowing customers a vast array of destinations to explore. Often, these sites contain a directory of all stores located within the shopping center, allowing customers a top-down view of their current position, and their desired store location. In this paper, we present a customer centric approach to directory navigation using a service robot, where the robot is able to display the directory, and guide customers to needed destinations.